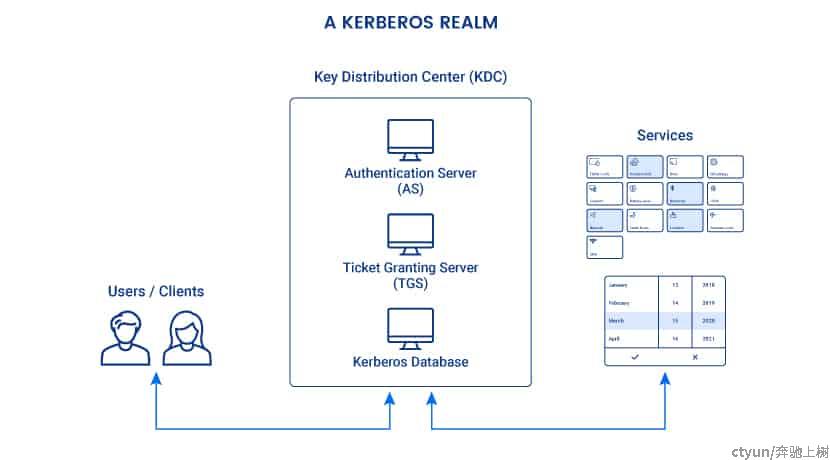

Architecture Design

The server side consists of three parts:

- An authentication server (AS): The AS performs initial authentication when a user wants to access a service.

- A ticket granting server (TGS): This server connects a user with the service server (SS).

- A Kerberos database: This database stores IDs and passwords of verified users.

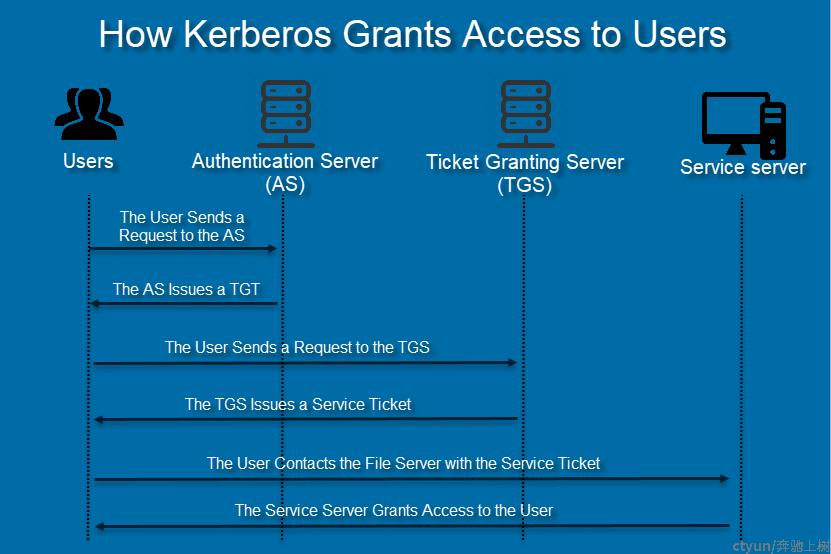

Interaction flow diagram

For a packet-capture walkthrough of the authentication process, see:

https://www.golinuxcloud.com/kerberos-auth-packet-analysis-wireshark/

Client Commands

AS Authentication

In a Linux terminal, run the authentication command:

kinit -kt /etc/security/keytabs/hdfs.keytab hdfs/_HOST

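The command above authenticates with kinit and a keytab file. After a successful authentication, the client obtains a TGT (ticket-granting ticket).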
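Note that `_HOST` is a placeholder convention from Hadoop's configuration; kinit is not expected to expand it, so on the command line it normally has to be replaced with the real host name. The sketch below shows that substitution, assuming the keytab principal uses the lower-cased host name. `expand_host` and `kinit_command` are hypothetical helper names, not part of any Kerberos or Hadoop tool.

```python
import socket
from typing import List, Optional


def expand_host(principal: str, hostname: Optional[str] = None) -> str:
    """Replace the _HOST placeholder with a concrete, lower-cased host name."""
    host = (hostname or socket.getfqdn()).lower()
    return principal.replace("_HOST", host)


def kinit_command(keytab: str, principal: str,
                  hostname: Optional[str] = None) -> List[str]:
    """Build the kinit argument list: -k uses a keytab, -t names the keytab file."""
    return ["kinit", "-kt", keytab, expand_host(principal, hostname)]


if __name__ == "__main__":
    cmd = kinit_command("/etc/security/keytabs/hdfs.keytab", "hdfs/_HOST", "hadoop001")
    print(" ".join(cmd))
```

The resulting list can be passed to `subprocess.run` on a host where kinit and the keytab are available.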

To view the authentication information:

klist

Sample output:

    Ticket cache: FILE:/tmp/krb5cc_1006
    Default principal: hdfs/hadoop001@EXAMPLE.CN

    Valid starting       Expires              Service principal
    07/25/2023 16:33:45  07/26/2023 16:33:45  krbtgt/EXAMPLE.CN@EXAMPLE.CN
            renew until 08/01/2023 16:33:45

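The output shows where the credentials are cached: /tmp/krb5cc_1006. The trailing 1006 is the current user's system ID (UID).

It also shows the expiry time, here 07/26/2023 16:33:45. Once that time has passed, you need to authenticate again with kinit.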
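The two points above (cache location and expiry) can be read from the klist output programmatically. The following is a minimal sketch, assuming the `MM/DD/YYYY HH:MM:SS` date layout of the sample; klist's date format varies with locale and version. `parse_klist` and `needs_kinit` are hypothetical helper names.

```python
import re
from datetime import datetime

KLIST_OUTPUT = """\
Ticket cache: FILE:/tmp/krb5cc_1006
Default principal: hdfs/hadoop001@EXAMPLE.CN

Valid starting       Expires              Service principal
07/25/2023 16:33:45  07/26/2023 16:33:45  krbtgt/EXAMPLE.CN@EXAMPLE.CN
        renew until 08/01/2023 16:33:45
"""

TIME_FMT = "%m/%d/%Y %H:%M:%S"
STAMP = r"(\d{2}/\d{2}/\d{4} \d{2}:\d{2}:\d{2})"
TICKET_RE = re.compile(r"^" + STAMP + r"\s+" + STAMP + r"\s+(\S+)", re.MULTILINE)


def parse_klist(text: str) -> dict:
    """Extract the cache path, the UID suffix (if any) and the ticket rows."""
    cache = re.search(r"^Ticket cache: (?:FILE:)?(\S+)", text, re.MULTILINE).group(1)
    uid = re.search(r"krb5cc_(\d+)$", cache)
    tickets = [
        {
            "start": datetime.strptime(start, TIME_FMT),
            "expires": datetime.strptime(end, TIME_FMT),
            "service": service,
        }
        for start, end, service in TICKET_RE.findall(text)
    ]
    return {"cache": cache, "uid": int(uid.group(1)) if uid else None, "tickets": tickets}


def needs_kinit(info: dict, now: datetime) -> bool:
    """True when no krbtgt ticket in the cache is still valid at `now`."""
    return not any(
        t["service"].startswith("krbtgt/") and t["expires"] > now
        for t in info["tickets"]
    )


if __name__ == "__main__":
    info = parse_klist(KLIST_OUTPUT)
    print(info["cache"], info["uid"])
    print(needs_kinit(info, datetime(2023, 7, 26, 17, 0, 0)))
```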

If pre-authentication is enabled on the server, a successful authentication produces two log entries on the KDC:

Jul 25 16:33:45 hadoop001 krb5kdc[8654](info): AS_REQ (8 etypes {18 17 16 23 25 26 20 19}) 10.30.19.8: NEEDED_PREAUTH: hdfs/hadoop001@EXAMPLE.CN for krbtgt/EXAMPLE.CN@EXAMPLE.CN, Additional pre-authentication required

Jul 25 16:33:45 hadoop001 krb5kdc[8661](info): AS_REQ (8 etypes {18 17 16 23 25 26 20 19}) 10.30.19.8: ISSUE: authtime 1690274025, etypes {rep=18 tkt=18 ses=18}, hdfs/hadoop001@EXAMPLE.CN for krbtgt/EXAMPLE.CN

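The log shows that the first request is answered with NEEDED_PREAUTH: the server returns a pre-authentication-required error straight to the client. The client then retries with the pre-authentication data, and the second request succeeds.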
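To make the two-step exchange easier to spot in a longer log, the entries can be parsed into fields. This is a sketch based only on the two sample lines above, not a general krb5kdc log parser; `parse_kdc_line` is a hypothetical helper, and the etype names follow the IANA Kerberos encryption type numbers.

```python
import re

# Encryption type numbers that appear in the sample log lines.
ETYPE_NAMES = {
    16: "des3-cbc-sha1",
    17: "aes128-cts-hmac-sha1-96",
    18: "aes256-cts-hmac-sha1-96",
    19: "aes128-cts-hmac-sha256-128",
    20: "aes256-cts-hmac-sha384-192",
    23: "arcfour-hmac",
    25: "camellia128-cts-cmac",
    26: "camellia256-cts-cmac",
}

HEAD_RE = re.compile(
    r"(?P<req>AS_REQ|TGS_REQ) \(\d+ etypes \{(?P<etypes>[\d ]+)\}\) "
    r"(?P<addr>\S+): (?P<status>[A-Z_]+):"
)
PRINCIPALS_RE = re.compile(r"(\S+@[^\s,]+) for ([^\s,]+)")


def parse_kdc_line(line: str):
    """Return the request type, status and principals of one KDC log line."""
    line = line.replace(" @", "@")  # tolerate a stray space before the realm
    head = HEAD_RE.search(line)
    if head is None:
        return None
    who = PRINCIPALS_RE.search(line, head.end())
    return {
        "request": head["req"],
        "etypes": [ETYPE_NAMES.get(int(n), n) for n in head["etypes"].split()],
        "address": head["addr"],
        "status": head["status"],
        "client": who.group(1) if who else None,
        "server": who.group(2) if who else None,
    }


SAMPLE = [
    "AS_REQ (8 etypes {18 17 16 23 25 26 20 19}) 10.30.19.8: NEEDED_PREAUTH: "
    "hdfs/hadoop001@EXAMPLE.CN for krbtgt/EXAMPLE.CN@EXAMPLE.CN, "
    "Additional pre-authentication required",
    "AS_REQ (8 etypes {18 17 16 23 25 26 20 19}) 10.30.19.8: ISSUE: authtime 1690274025, "
    "etypes {rep=18 tkt=18 ses=18}, hdfs/hadoop001@EXAMPLE.CN for krbtgt/EXAMPLE.CN",
]

if __name__ == "__main__":
    for entry in map(parse_kdc_line, SAMPLE):
        print(entry["request"], entry["status"], entry["client"], "->", entry["server"])
```

A NEEDED_PREAUTH entry followed by an ISSUE entry for the same client and address is the pattern described above.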

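Pre-authentication is not enabled by default. It has to be added manually, and doing so makes network access more secure.

Default configuration file location: /var/kerberos/krb5kdc/kdc.conf

Its contents are as follows: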

    [realms]
     EXAMPLE.CN = {
      acl_file = /var/kerberos/krb5kdc/kadm5.acl
      dict_file = /usr/share/dict/words
      admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
      supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
      max_life = 1d 0h 0m 0s
      max_renewable_life = 7d 0h 0m 0s
      default_principal_flags = +preauth
      iprop_enable = true
      iprop_port = 744
     }
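The `max_life` and `max_renewable_life` values line up with the klist output earlier: the ticket expires one day after issue and can be renewed for up to seven days. A small sketch for checking these settings from a script follows. It assumes a flat realm block like the one above and is not a full parser for the kdc.conf format. `realm_settings`, `duration_seconds` and `preauth_by_default` are hypothetical helpers.

```python
import re

KDC_CONF = """\
[realms]
EXAMPLE.CN = {
    max_life = 1d 0h 0m 0s
    max_renewable_life = 7d 0h 0m 0s
    default_principal_flags = +preauth
}
"""

UNIT_SECONDS = {"d": 86400, "h": 3600, "m": 60, "s": 1}


def realm_settings(text: str, realm: str) -> dict:
    """Return the key/value pairs inside `realm = { ... }` (flat blocks only)."""
    pattern = r"^\s*" + re.escape(realm) + r"\s*=\s*\{(.*?)^\s*\}"
    match = re.search(pattern, text, re.DOTALL | re.MULTILINE)
    if match is None:
        return {}
    settings = {}
    for line in match.group(1).splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            settings[key.strip()] = value.strip()
    return settings


def duration_seconds(value: str) -> int:
    """Convert a duration such as '1d 0h 0m 0s' to seconds."""
    return sum(int(n) * UNIT_SECONDS[u] for n, u in re.findall(r"(\d+)\s*([dhms])", value))


def preauth_by_default(settings: dict) -> bool:
    """True when new principals get the preauth flag by default."""
    flags = re.split(r"[,\s]+", settings.get("default_principal_flags", "").strip())
    return "+preauth" in flags


if __name__ == "__main__":
    conf = realm_settings(KDC_CONF, "EXAMPLE.CN")
    print(duration_seconds(conf["max_life"]), preauth_by_default(conf))
```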

For additional parameters, see the official documentation:

https://web.mit.edu/kerberos/krb5-1.12/doc/admin/conf_files/kdc_conf.html

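TGS Authentication

For TGS authentication, the client must present the credential (the TGT) obtained from the successful AS authentication and send an encrypted request. The processing involved is fairly tedious, so it is normally wrapped by a higher-level API.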
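To show what such an API hides, here is a toy model of the TGS exchange. It is a sketch for illustration only. The `Sealed` class stands in for real encryption, which it does not perform. The AS step is reduced to handing out a TGT and session key. Timestamps, lifetimes and replay protection are omitted. All names in it are hypothetical.

```python
import secrets


class Sealed:
    """Stand-in for ciphertext: the payload is readable only with the sealing key."""

    def __init__(self, key: bytes, payload: dict):
        self._key = key
        self._payload = dict(payload)

    def open(self, key: bytes) -> dict:
        if not secrets.compare_digest(key, self._key):
            raise PermissionError("wrong key")
        return dict(self._payload)


# Long-term keys held in the KDC database (random toy values).
KRBTGT_KEY = secrets.token_bytes(16)
SERVICE_KEYS = {"hdfs/hadoop002@EXAMPLE.CN": secrets.token_bytes(16)}


def as_exchange(client: str):
    """AS step, heavily simplified: hand out a TGT plus its session key."""
    session_key = secrets.token_bytes(16)
    tgt = Sealed(KRBTGT_KEY, {"client": client, "session_key": session_key})
    return tgt, session_key


def tgs_exchange(tgt: Sealed, authenticator: Sealed, service: str):
    """TGS step: validate the TGT and authenticator, then issue a service ticket."""
    tgt_body = tgt.open(KRBTGT_KEY)  # only the KDC can read the TGT
    auth_body = authenticator.open(tgt_body["session_key"])
    if auth_body["client"] != tgt_body["client"]:
        raise PermissionError("authenticator does not match the TGT")
    service_session_key = secrets.token_bytes(16)
    ticket = Sealed(SERVICE_KEYS[service],
                    {"client": tgt_body["client"], "session_key": service_session_key})
    reply = Sealed(tgt_body["session_key"],
                   {"service": service, "session_key": service_session_key})
    return ticket, reply


if __name__ == "__main__":
    client = "hdfs/hadoop001@EXAMPLE.CN"
    service = "hdfs/hadoop002@EXAMPLE.CN"
    tgt, tgt_key = as_exchange(client)
    authenticator = Sealed(tgt_key, {"client": client})
    ticket, reply = tgs_exchange(tgt, authenticator, service)
    # The client reads the new session key from the reply; the service reads the
    # same key from the ticket using its own long-term key (the AP step).
    client_view = reply.open(tgt_key)["session_key"]
    service_view = ticket.open(SERVICE_KEYS[service])["session_key"]
    print(client_view == service_view)
```

The point of the model is who can read what: the client never sees inside the TGT or the service ticket, yet ends up sharing a session key with the service.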

Simulating this step

Use an hdfs command to verify the TGS authentication request

On an HDFS DataNode in the big data cluster, run:

hdfs dfs -ls /

Jul 25 16:51:48 hadoop001 krb5kdc[8653](info): TGS_REQ (4 etypes {18 17 16 23}) 10.30.19.8: ISSUE: authtime 1690274025, etypes {rep=18 tkt=18 ses=18}, hdfs/hadoop001@EXAMPLE.CN for hdfs/hadoop002@EXAMPLE.CN

Jul 25 16:51:48 hadoop001 krb5kdc[8661](info): TGS_REQ (4 etypes {18 17 16 23}) 10.30.19.8: ISSUE: authtime 1690274025, etypes {rep=18 tkt=18 ses=18}, hdfs/hadoop001@EXAMPLE.CN for hdfs/hadoop003@EXAMPLE.CN

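The KDC log on the Kerberos server shows the TGS_REQ entries, and the hdfs command returns its result, which means that both the TGS and AP authentication steps were completed here.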
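One detail worth noting in these entries: `authtime 1690274025` is the same value as in the earlier AS_REQ ISSUE entry. It is a Unix timestamp recording when the initial authentication happened, not when the TGS request was made. A quick conversion sketch follows; the UTC+8 offset is an assumption about the cluster's clock and is not stated in the logs.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the cluster's local time is UTC+8.
CLUSTER_TZ = timezone(timedelta(hours=8))


def authtime_to_local(authtime: int, tz: timezone = CLUSTER_TZ) -> datetime:
    """Convert the KDC log's authtime (seconds since the Unix epoch) to local time."""
    return datetime.fromtimestamp(authtime, tz)


if __name__ == "__main__":
    print(authtime_to_local(1690274025).strftime("%m/%d/%Y %H:%M:%S"))
    # prints 07/25/2023 16:33:45
```

Under that assumption the result matches the "Valid starting" time in the klist output, which ties the service tickets back to the original kinit.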

The NameNode log now shows the corresponding request:

2023-07-25 17:09:12,706 | INFO | SecurityLogger.org.apache.hadoop.ipc.Server | Auth successful for hdfs/hadoop001@EXAMPLE.CN (auth:KERBEROS) from 127.0.0.1:31026

2023-07-25 17:09:12,717 | INFO | SecurityLogger.org.apache.hadoop.security.authorize.ServiceAuthorizationManager | Authorization successful for hdfs/hadoop001@EXAMPLE (auth:KERBEROS) for protocol=interface org.apache.hadoop.hdfs.protocol.ClientProtocol
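These NameNode entries can be checked from a script as well. The sketch below assumes the pipe-delimited layout shown above (timestamp, level, logger, message), which depends on the cluster's log4j pattern. `split_records` and `parse_record` are hypothetical helpers. Splitting on a zero-width match requires Python 3.7 or later.

```python
import re

NAMENODE_LOG = (
    "2023-07-25 17:09:12,706 | INFO | SecurityLogger.org.apache.hadoop.ipc.Server | "
    "Auth successful for hdfs/hadoop001@EXAMPLE.CN (auth:KERBEROS) from 127.0.0.1:31026 "
    "2023-07-25 17:09:12,717 | INFO | "
    "SecurityLogger.org.apache.hadoop.security.authorize.ServiceAuthorizationManager | "
    "Authorization successful for hdfs/hadoop001@EXAMPLE (auth:KERBEROS) "
    "for protocol=interface org.apache.hadoop.hdfs.protocol.ClientProtocol"
)

# A record starts wherever a timestamp is followed by " | ".
RECORD_START = re.compile(r"(?=\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3} \| )")
AUTH_RE = re.compile(r"(Auth|Authorization) successful for (\S+) \(auth:(\w+)\)")


def split_records(text: str) -> list:
    """Split a run of log text into individual records, even if they share a line."""
    return [part.strip() for part in RECORD_START.split(text) if part.strip()]


def parse_record(record: str):
    """Return the step, principal and auth method of one record, or None."""
    timestamp, level, logger, message = [f.strip() for f in record.split(" | ", 3)]
    match = AUTH_RE.search(message)
    if match is None:
        return None
    return {
        "timestamp": timestamp,
        "step": match.group(1),
        "principal": match.group(2),
        "method": match.group(3),
    }


if __name__ == "__main__":
    for record in split_records(NAMENODE_LOG):
        print(parse_record(record))
```

An "Auth" record followed by an "Authorization" record with `auth:KERBEROS` indicates that the service accepted the Kerberos ticket.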

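Source Code Analysis

As the previous step shows, running the hdfs command completes both TGS and AP authentication directly, so the processing details are presumably encapsulated in Hadoop's libraries.

The class that handles authentication in the source: UserGroupInformation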

    /**
     * User and group information for Hadoop.
     * This class wraps around a JAAS Subject and provides methods to determine the
     * user's username and groups. It supports both the Windows, Unix and Kerberos
     * login modules.
     */

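So far I have not found an open-source tool that tests Kerberos authentication directly. Java developers generally have to build on wrapper interfaces. C developers can do secondary development directly on top of the source code, which still takes a fair amount of work.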