(I) Introduction to Kata Containers

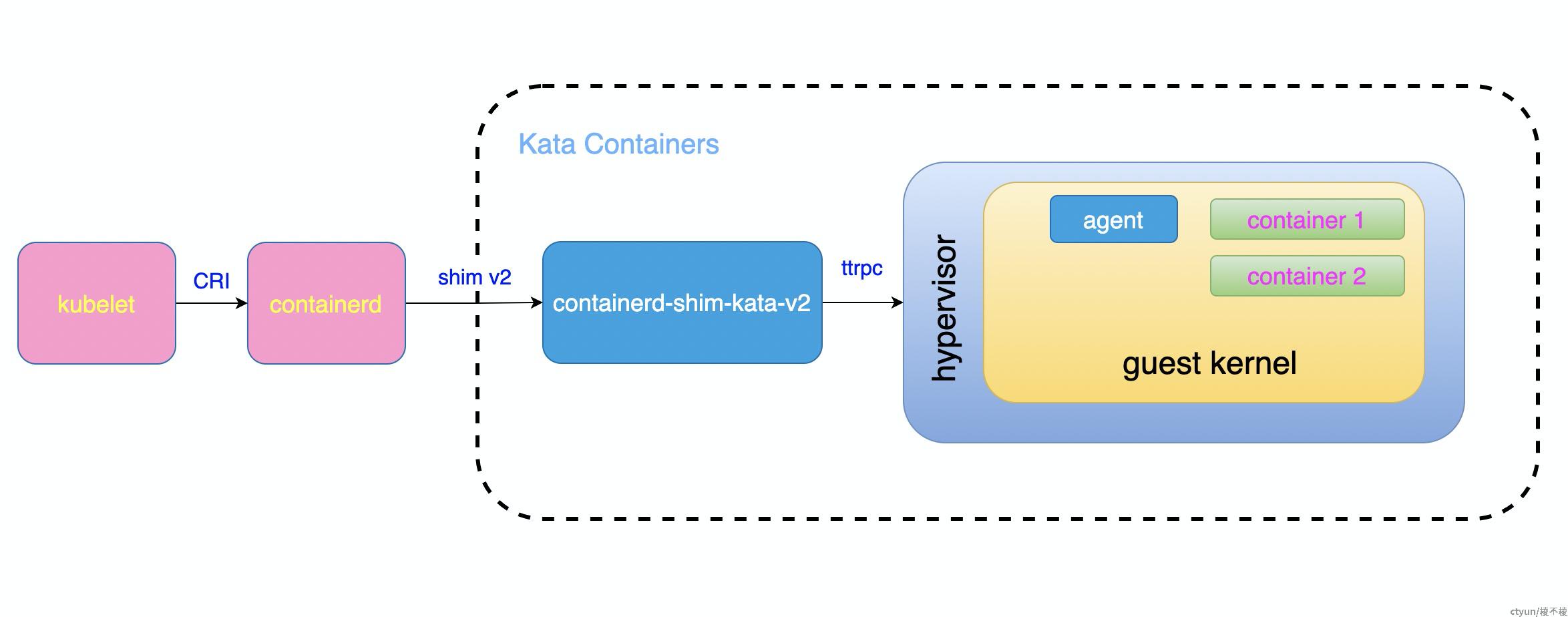

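Kata Containers architecture

The Kata Containers architecture can be summarized with the diagram above (image 1).

In that diagram, the ecosystem can be divided into 3 parts:

- The container scheduling system, e.g. kubelet;

- The upper-level runtime. This layer implements the CRI interface and uses the lower-level runtime to manage containers. Typical examples are containerd and CRI-O; the diagram shows containerd;

- The lower-level runtime. This is the layer that directly manages containers. Typical examples are runc and Kata Containers; here it refers to the containerd-shim-kata-v2 program of Kata Containers.

Kata Containers receives requests from the upper-level runtime and carries out container management tasks such as creation and deletion.

The diagram also contains 3 communication protocols:

- CRI: the Container Runtime Interface, the communication interface between k8s (in practice, kubelet) and the upper-level runtime

- shim v2: the communication interface between the upper-level runtime (e.g. containerd) and the lower-level runtime (e.g. Kata Containers)

- agent protocol: a protocol internal to Kata Containers, used between the Kata Containers shim process and the agent inside the guest.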
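
As a compact restatement of the three layers and three protocols listed above, the following Python sketch models the call chain as data. The `Hop` class and the `kata-agent` label for the in-guest agent are illustrative names chosen here, not part of any Kata Containers or containerd API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One caller -> callee edge and the protocol spoken on it."""
    caller: str
    callee: str
    protocol: str


# The three hops described in the text, from the scheduler down to the guest.
CALL_CHAIN = [
    Hop("kubelet", "containerd", "CRI"),
    Hop("containerd", "containerd-shim-kata-v2", "shim v2"),
    Hop("containerd-shim-kata-v2", "kata-agent", "agent protocol"),
]


def describe(chain):
    """Render each hop as a human-readable line."""
    return [f"{h.caller} --[{h.protocol}]--> {h.callee}" for h in chain]


def is_connected(chain):
    """True if every hop's callee is the caller of the next hop."""
    return all(a.callee == b.caller for a, b in zip(chain, chain[1:]))


if __name__ == "__main__":
    for line in describe(CALL_CHAIN):
        print(line)
```

Reading the chain top to bottom makes it clear that containerd-shim-kata-v2 is the only component that speaks both to the host side (shim v2) and to the guest side (agent protocol).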

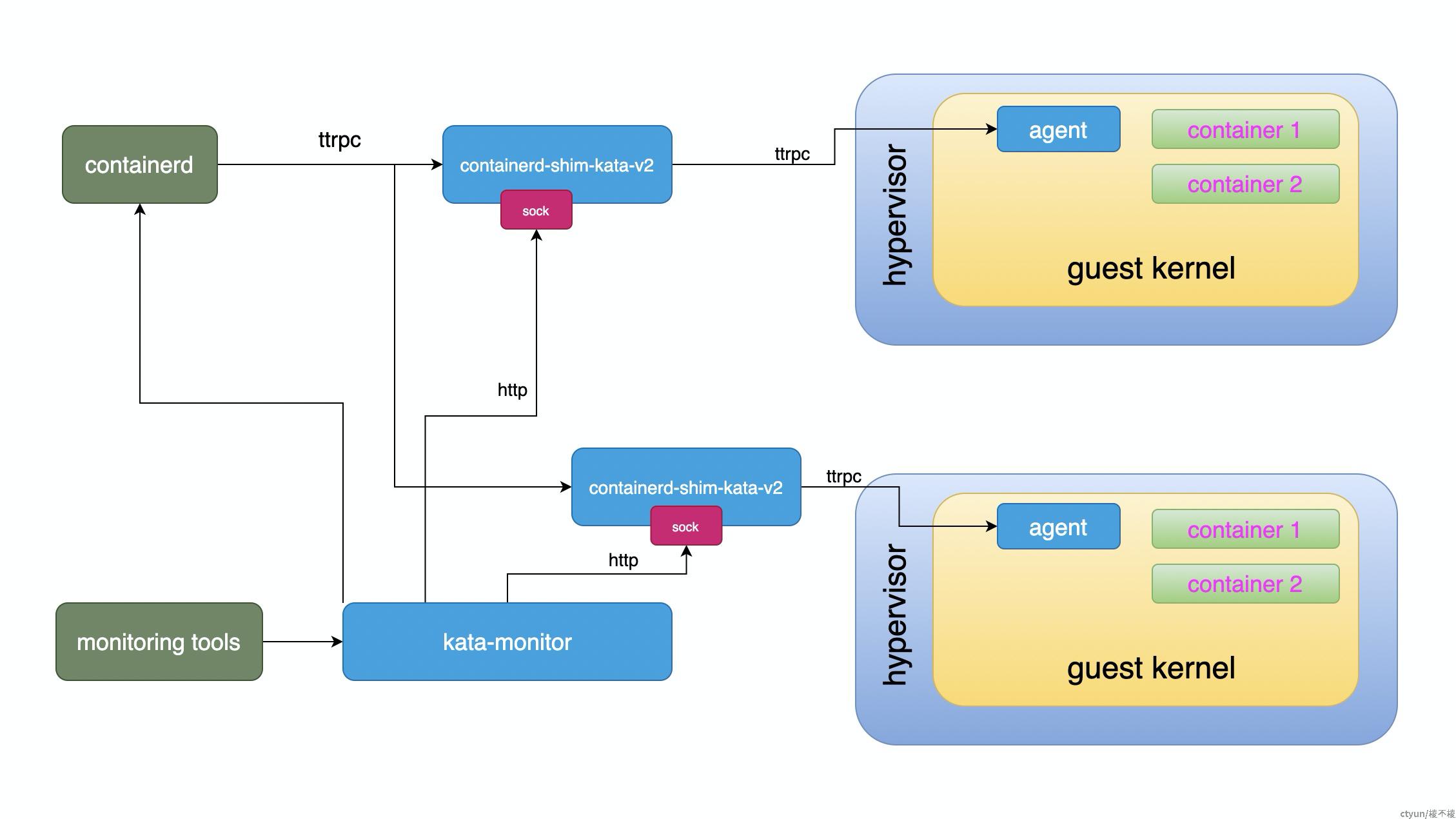

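The Kata Containers runtime process

The runtime is a process that runs on the host and supports the shim v2 protocol. In most cases, "runtime" and "shim v2" can be treated as the same thing.

The rough architecture of the runtime is shown in the diagram above (image 2).

The executable for the whole runtime is containerd-shim-kata-v2, i.e. the shim process, which is also the entry point of Kata Containers. Upwards, the runtime accepts requests from containerd (through the shim v2 "protocol"); towards the guest, it uses the agent inside the guest to control container creation, deletion and other management operations.

The runtime and the agent communicate over ttrpc, a gRPC-like mechanism that uses protocol buffers for encoding. The protocol was created by containerd for communication between containerd and the lower-level runtime. In Kata Containers, the runtime and the agent also communicate over ttrpc.

The agent process

The agent can be started as the guest init process. Alternatively, systemd or a similar program can act as init, with the agent started as an ordinary process.

The agent has two roles:

- ttrpc service: acting as the agent service, it responds over ttrpc to requests from the shim process

- Starting containers: when a container is started, the agent launches it in a new process by running the agent binary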

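(II) Replacing the Kata guest image

The original Kata VM image is based on Clear Linux. That system has no bash and cannot be entered over ssh, so the Kata guest image has to be rebuilt.

The steps are:

1) Pull the code from GitHub and, using Ubuntu as the base system for Kata, build the kata-ubuntu.image system image;

2) Configure Kata to use the kata-ubuntu.image system image, i.e. copy kata-ubuntu.image to the /opt/kata/share/kata-containers/ directory on the relevant k8s node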

[ctgcdt@eaci-3 kata-containers]$ sudo cp /home/ctgcdt/szj/kata-ubuntu.image .

[ctgcdt@eaci-3 kata-containers]$ ls

config-5.15.48 kata-clearlinux-latest.image kata-containers-initrd.img vmlinux-5.15.48-93 vmlinux-upcall-5.10-90 vmlinuz.container

kata-alpine-3.15.initrd kata-containers.img kata-ubuntu.image vmlinux.container vmlinuz-5.15.48-93

[ctgcdt@eaci-3 kata-containers]$ ll kata-containers.img

lrwxrwxrwx. 1 1001 121 28 Aug 17 2022 kata-containers.img -> kata-clearlinux-latest.image

3) Delete the kata-containers.img symlink and recreate it so that it points to kata-ubuntu.image

[ctgcdt@eaci-3 kata-containers]$ sudo rm -f kata-containers.img

[ctgcdt@eaci-3 kata-containers]$ sudo ln -s kata-ubuntu.image kata-containers.img

[ctgcdt@eaci-3 kata-containers]$ ll kata-containers.img

lrwxrwxrwx. 1 root root 17 Jul 6 23:16 kata-containers.img -> kata-ubuntu.image

4) Enable debug_console_enabled by editing /opt/kata/share/defaults/kata-containers/configuration.toml or configuration-clh-upcall.toml

[ctgcdt@eaci-2 kata-containers]$ grep "debug_console_enabled" /opt/kata/share/defaults/kata-containers/configuration.toml

#debug_console_enabled = true

[ctgcdt@eaci-2 kata-containers]$ sudo sed -i "s#\#debug_console_enabled#debug_console_enabled#g" /opt/kata/share/defaults/kata-containers/configuration.toml

[ctgcdt@eaci-2 kata-containers]$ grep "debug_console_enabled" /opt/kata/share/defaults/kata-containers/configuration.toml

debug_console_enabled = true
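
The `sed` edit above simply removes the `#` in front of `debug_console_enabled`. A minimal Python sketch of the same idea, operating on the file contents as a string, is shown below. `enable_key` is a hypothetical helper, and unlike the `sed` expression it only touches a `#` that starts the line.

```python
import re


def enable_key(toml_text: str, key: str = "debug_console_enabled") -> str:
    """Uncomment lines of the form '#key = value'; leave everything else alone."""
    pattern = re.compile(
        rf"^([ \t]*)#[ \t]*({re.escape(key)}[ \t]*=)",
        flags=re.MULTILINE,
    )
    # Keep the original indentation (group 1) and the 'key =' part (group 2).
    return pattern.sub(r"\1\2", toml_text)


if __name__ == "__main__":
    before = "# Enable debug console.\n#debug_console_enabled = true\n"
    print(enable_key(before), end="")
```

Reading and writing the real configuration file is left to the caller, so the function can be checked on sample text first.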

[ctgcdt@eaci-2 kata-containers]$ grep "console" /opt/kata/share/defaults/kata-containers/configuration-clh-upcall.toml

# Enable debug console.

debug_console_enabled = true

(III) Testing Kata containers

1) Create a Kata container

Create a container group in the console and check that it was created on node eaci-2:

$ kubectl get pod -A -owide|grep eaci-5hxfla-szj

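ns-tenant-3671 eaci-5hxfla-szj

2) Find the sandbox ID of the Kata container. If the pod has several containers, any container ID will do: they all map to the same process on the host, because the host cannot see the containers inside the Kata VM.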

$ sudo crictl ps |grep eaci-5hxfla-szj

29164a6d9fec7 d3cd072556c21 32 seconds ago Running container-2-szj 0 727bec446ca43 eaci-5hxfla-szj

f23b8ae61df1f b3c5c59017fbb 35 seconds ago Running container-1-szj 0 727bec446ca43 eaci-5hxfla-szj

[ctgcdt@eaci-2 kata-containers]$ sudo crictl inspect f23b8ae61df1f |grep cgroupsPath -A 10

"cgroupsPath": "kubepods-besteffort-podee1c3e49_0c4d_4535_a640_7a52aebfc862.slice:cri-containerd:f23b8ae61df1f0a41a00208f505380e8472bacffa8af1435be336721809f29e9",

"namespaces": [

{

"type": "pid"

},

{

"type": "ipc",

"path": "/proc/26072/ns/ipc"

},

[ctgcdt@eaci-2 kata-containers]$ ps -ef|grep 26072

root 26072 26040 1 11:12 ? 00:00:00 /opt/kata/bin/cloud-hypervisor-upcall --api-socket /run/vc/vm/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41/clh-api.sock

ctgcdt 34086 38923 0 11:13 pts/0 00:00:00 grep --color=auto 26072

[ctgcdt@eaci-2 kata-containers]$ ps -ef|grep 26040

root 26040 1 1 11:12 ? 00:00:01 /opt/kata/bin/containerd-shim-kata-v2 -namespace k8s.io -address /run/containerd/containerd.sock -publish-binary /usr/bin/containerd -id 727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41 -debug

root 26071 26040 0 11:12 ? 00:00:00 /opt/kata/libexec/virtiofsd --syslog -o cache=auto -o no_posix_lock -o source=/run/kata-containers/shared/sandboxes/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41/shared --fd=3 -f --thread-pool-size=1 -o announce_submounts

root 26072 26040 1 11:12 ? 00:00:01 /opt/kata/bin/cloud-hypervisor-upcall --api-socket /run/vc/vm/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41/clh-api.sock

ctgcdt 34954 38923 0 11:13 pts/0 00:00:00 grep --color=auto 26040

This shows that the sandbox ID for the container-1-szj container in the pod is 727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41.
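
The lookup in step 2 follows two hops: the `ipc` namespace path in the `crictl inspect` output gives the PID of the hypervisor process, and the `-id` argument of its parent containerd-shim-kata-v2 process gives the sandbox ID. The sketch below extracts both values from text, assuming the output formats shown in the transcripts above. The function names are hypothetical.

```python
import re


def vmm_pid_from_inspect(inspect_text: str):
    """Return the PID embedded in the ipc namespace path, or None."""
    m = re.search(r'"path":\s*"/proc/(\d+)/ns/ipc"', inspect_text)
    return int(m.group(1)) if m else None


def sandbox_id_from_cmdline(cmdline: str):
    """Return the value of the shim's '-id' argument, or None."""
    m = re.search(r"\s-id\s+([0-9a-f]+)\b", cmdline)
    return m.group(1) if m else None


if __name__ == "__main__":
    inspect_snippet = '"type": "ipc",\n"path": "/proc/26072/ns/ipc"'
    shim = (
        "/opt/kata/bin/containerd-shim-kata-v2 -namespace k8s.io "
        "-address /run/containerd/containerd.sock "
        "-publish-binary /usr/bin/containerd "
        "-id 727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41 -debug"
    )
    print(vmm_pid_from_inspect(inspect_snippet))
    print(sandbox_id_from_cmdline(shim))
```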

3) Run the following command to enter the Kata VM

$ sudo /opt/kata/bin/kata-runtime exec 727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

root@localhost:/#

Take a look at the file systems inside:

root@localhost:/# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/root ext4 117M 102M 8.5M 93% /

devtmpfs devtmpfs 1.5G 0 1.5G 0% /dev

tmpfs tmpfs 1.5G 0 1.5G 0% /dev/shm

tmpfs tmpfs 301M 28K 301M 1% /run

tmpfs tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs tmpfs 1.5G 0 1.5G 0% /sys/fs/cgroup

tmpfs tmpfs 1.5G 0 1.5G 0% /tmp

kataShared virtiofs 252G 4.1G 248G 2% /run/kata-containers/shared/containers

shm tmpfs 1.5G 0 1.5G 0% /run/kata-containers/sandbox/shm

none virtiofs 879G 135G 700G 17% /run/kata-containers/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41/rootfs

none virtiofs 879G 135G 700G 17% /run/kata-containers/f23b8ae61df1f0a41a00208f505380e8472bacffa8af1435be336721809f29e9/rootfs

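none virtiofs 879G 135G 700G 17% /run/kata-containers/29164a6d9fec7f05f551679eac34126b60dc5f7b750effda1bebca21c19d4484/rootfs

As the rootfs mounts show, there are 3 containers in the Kata VM: the sandbox itself (727bec…) and the two application containers.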
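
The container IDs can be read off the `df` output mechanically, because each container's root filesystem is mounted at `/run/kata-containers/<id>/rootfs`. A small sketch, assuming that mount layout as seen in the output above (the helper name is made up for illustration):

```python
import re

# Matches the mount point column of df for a per-container rootfs.
ROOTFS_RE = re.compile(r"/run/kata-containers/([0-9a-f]+)/rootfs\s*$")


def container_ids_from_df(df_output: str):
    """Return container IDs, in order of appearance, from df -hT style text."""
    ids = []
    for line in df_output.splitlines():
        m = ROOTFS_RE.search(line)
        if m:
            ids.append(m.group(1))
    return ids


if __name__ == "__main__":
    sample = (
        "shm tmpfs 1.5G 0 1.5G 0% /run/kata-containers/sandbox/shm\n"
        "none virtiofs 879G 135G 700G 17% /run/kata-containers/727bec44/rootfs\n"
    )
    print(container_ids_from_df(sample))
```

Shared mounts such as `/run/kata-containers/shared/containers` are skipped because they do not end in `/rootfs`.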

4) Next, look at the containers' cgroup directories. The container metrics all live under the cri-containerd directory of each cgroup controller

root@localhost:/# find /sys/fs/cgroup/ -name "727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41*"

/sys/fs/cgroup/cpuset/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/memory/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/blkio/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/pids/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/perf_event/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/net_cls,net_prio/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/freezer/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/devices/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/cpu,cpuacct/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

/sys/fs/cgroup/systemd/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41

Inspect the metric data under the container's cgroup directory:

root@localhost:/# ls /sys/fs/cgroup/cpu/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41/

cgroup.clone_children cpuacct.stat cpuacct.usage_sys

cgroup.procs cpuacct.usage cpuacct.usage_user

cpu.cfs_period_us cpuacct.usage_all notify_on_release

cpu.cfs_quota_us cpuacct.usage_percpu tasks

cpu.shares cpuacct.usage_percpu_sys

cpu.stat cpuacct.usage_percpu_user

root@localhost:/# cat /sys/fs/cgroup/cpu/cri-containerd/727bec446ca43ddbdff7546481f88aa2c6b79d79c0822ced700367e24cc15a41/cpu.shares

2

Here cpu.shares holds the configured CPU weight (a relative share, not a hard cap); the directory contains many other metrics as well.
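
The `cgroupsPath` value printed by `crictl inspect` in step 2 has the form `<slice>:<prefix>:<id>`, while the directories found inside the guest look like `/sys/fs/cgroup/<controller>/cri-containerd/<id>`. The sketch below splits the former and builds the latter. It assumes this cgroup v1 layout holds in the guest, and the function names are hypothetical.

```python
def parse_cgroups_path(cgroups_path: str):
    """Split 'slice:prefix:container_id' into its three fields."""
    slice_name, prefix, container_id = cgroups_path.split(":")
    return slice_name, prefix, container_id


def guest_cgroup_dir(cgroups_path: str, controller: str = "cpu,cpuacct") -> str:
    """Build the cgroup directory observed inside the Kata guest."""
    _, prefix, container_id = parse_cgroups_path(cgroups_path)
    return f"/sys/fs/cgroup/{controller}/{prefix}/{container_id}"


if __name__ == "__main__":
    path = (
        "kubepods-besteffort-podee1c3e49_0c4d_4535_a640_7a52aebfc862.slice:"
        "cri-containerd:"
        "f23b8ae61df1f0a41a00208f505380e8472bacffa8af1435be336721809f29e9"
    )
    print(guest_cgroup_dir(path, "memory"))
```

Note that the pod slice (the first field) does not appear in the guest path at all, which matches the `find` results above.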

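5) Compare with the cgroup directory of a runc container

First look at the cgroup directory of a runc container. On the master host of the "30" cluster, pick any healthy pod and find its container ID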

$ kubectl get pod -owide nginx-szj-deploy-595479989b-n8sws

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-szj-deploy-595479989b-n8sws 1/1 Running 12 81d 172.26.222.3 10.50.208.30 <none> <none>

[docker@dev30 cgroup]$ kubectl describe pod nginx-szj-deploy-595479989b-n8sws |grep "Container ID" -C 5

IPs:

IP: 172.26.222.3

Controlled By: ReplicaSet/nginx-szj-deploy-595479989b

Containers:

nginx-szj:

Container ID: docker://7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e

Image: nginx:1.23.3

Image ID: docker-pullable://10.50.208.30:30012/jiangjietest/nginx@sha256:7f797701ded5055676d656f11071f84e2888548a2e7ed12a4977c28ef6114b17

Port: <none>

Host Port: <none>

State: Running

Use docker inspect to find the container's cgroup directory

[docker@dev30 cgroup]$ docker inspect 7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e |grep Cgroup

"CgroupnsMode": "host",

"Cgroup": "",

"CgroupParent": "kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice",

"DeviceCgroupRules": null,

List the matching directories in the cgroup hierarchy

$ find /sys/fs/cgroup/ -name "kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice*"

/sys/fs/cgroup/perf_event/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/cpuset/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/devices/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/blkio/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/freezer/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/hugetlb/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/net_cls,net_prio/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/cpu,cpuacct/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/pids/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/rdma/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

/sys/fs/cgroup/systemd/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice

The search can also be done by container ID

$ find /sys/fs/cgroup/ -name "*7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e*"

/sys/fs/cgroup/perf_event/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/cpuset/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/devices/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/blkio/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/freezer/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/hugetlb/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/net_cls,net_prio/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/cpu,cpuacct/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/pids/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/rdma/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

/sys/fs/cgroup/systemd/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope

Inspect the metric data under the container's cgroup directory

$ ls /sys/fs/cgroup/cpu/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope/

cgroup.clone_children cpuacct.stat cpuacct.usage_all cpuacct.usage_percpu_sys cpuacct.usage_sys cpu.cfs_period_us cpu.rt_period_us cpu.shares notify_on_release

cgroup.procs cpuacct.usage cpuacct.usage_percpu cpuacct.usage_percpu_user cpuacct.usage_user cpu.cfs_quota_us cpu.rt_runtime_us cpu.stat tasks

[docker@dev30 cgroup]$ cat /sys/fs/cgroup/cpu/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod33e37d43_8fcc_42f9_b89a_bc9ca55220b5.slice/docker-7ef5f0908c9e63a403d2d349a868b41e63e23eea598ad858f251a1c6ca391e0e.scope/cpu.shares

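102

The comparison shows that the two kinds of containers have different cgroup paths, but the metric files under a Kata container's cgroup are essentially the same as those under a runc container's cgroup.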
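
The two `cpu.shares` values seen above, 2 and 102, are consistent with the way the kubelet derives cgroup v1 CPU shares from a container's CPU request: 1024 shares per CPU, with a floor of 2. The sketch below reproduces that arithmetic. It illustrates the rule rather than quoting kubelet source, and the 100m request used for the runc pod is an assumption, since that pod's spec is not shown here.

```python
MIN_SHARES = 2           # floor applied when the request is zero or tiny
SHARES_PER_CPU = 1024    # cgroup v1 shares corresponding to one full CPU
MILLI_CPU_PER_CPU = 1000


def milli_cpu_to_shares(milli_cpu: int) -> int:
    """Convert a CPU request in millicores to cgroup v1 cpu.shares."""
    if milli_cpu == 0:
        return MIN_SHARES
    shares = milli_cpu * SHARES_PER_CPU // MILLI_CPU_PER_CPU
    return max(shares, MIN_SHARES)


if __name__ == "__main__":
    # No CPU request, as for a BestEffort pod.
    print(milli_cpu_to_shares(0))
    # Assumed 100m request for the burstable runc pod.
    print(milli_cpu_to_shares(100))
```

Under this rule a container with no CPU request gets the minimum weight, which fits the `kubepods-besteffort` slice name reported for the Kata pod.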

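6) Deploy cAdvisor in a Kata container

Create a workload from YAML in a regular k8s cluster and add the virtual-node label so that it is scheduled to the virtual node. The cAdvisor pod is then created as a Kata container.

The YAML used to create the cAdvisor pod is as follows: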

    apiVersion: "apps/v1"
    kind: "Deployment"
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "1"
      generation: 1
      name: "cadvisor"
      namespace: "default"
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          name: "cadvisor"
      strategy:
        rollingUpdate:
          maxSurge: "25%"
          maxUnavailable: "25%"
        type: "RollingUpdate"
      template:
        metadata:
          labels:
            app: ""
            ccse_app_name: ""
            kubernetes.io/qos: "None"
            name: "cadvisor"
            source: "CCSE"
            ccse.ctyun.cn/vnode: "true"
        spec:
          containers:
          - image: "registry-vpc.guizhouDev.ccr.ctyun.com:32099/temp-del/zhx-01:1.22.0"
            imagePullPolicy: "IfNotPresent"
            name: "container1-nginx"
            ports:
            - containerPort: 80
              protocol: "TCP"
            resources:
              limits:
                cpu: "100m"
                memory: "256Mi"
              requests:
                cpu: "100m"
                memory: "128Mi"
          - image: "registry-vpc.guizhouDev.ccr.ctyun.com:32099/temp-del/zhx-04-cadvisor:latest"
            imagePullPolicy: "IfNotPresent"
            name: "cadvisor"
            resources:
              requests:
                memory: 400Mi
                cpu: 400m
              limits:
                memory: 2000Mi
                cpu: 800m
            volumeMounts:
            - name: rootfs
              mountPath: /rootfs
              readOnly: true
            - name: var-run
              mountPath: /var/run
              readOnly: true
            - name: sys
              mountPath: /sys
              readOnly: true
            - name: docker
              mountPath: /var/lib/docker
              readOnly: true
            - name: disk
              mountPath: /dev/disk
              readOnly: true
            ports:
            - name: test
              containerPort: 8080
              protocol: TCP
          volumes:
          - name: rootfs
            hostPath:
              path: /
          - name: var-run
            hostPath:
              path: /var/run
          - name: sys
            hostPath:
              path: /sys
          - name: docker
            hostPath:
              path: /var/lib/docker
          - name: disk
            hostPath:
              path: /dev/disk

This creates 2 containers in one pod: one cadvisor and one nginx. Check the running containers

[ctgcdt@eaci-2 kata-containers]$ sudo crictl ps |grep vnode-02-cubecni-default-cadvisor-7f6

6dac3b2051470 9b7cc99821098 3 hours ago Running kube-proxy 0 8b855dee7ae52 vnode-02-cubecni-default-cadvisor-7f67df57bd-rjf9g

2a6ccbc5457d3 eb12107075737 3 hours ago Running cadvisor 0 8b855dee7ae52 vnode-02-cubecni-default-cadvisor-7f67df57bd-rjf9g

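6ad795788a5a0 b3c5c59017fbb 3 hours ago Running container1-nginx 0 8b855dee7ae52 vnode-02-cubecni-default-cadvisor-7f67df57bd-rjf9g

Checking the processes shows that these containers all belong to the same Kata process, which means the cadvisor and nginx containers live in the same Kata VM.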

[ctgcdt@eaci-2 kata-containers]$ sudo crictl inspect 6ad795788a5a0 |grep "cgroupsPath" -A 10

"cgroupsPath": "kubepods-burstable-podc80432ca_441d_44a7_9863_e79cb5d50e7f.slice:cri-containerd:6ad795788a5a010866ee1c9af17e5db1fd475f84f2b43acc70d42b6f52dea7ca",

"namespaces": [

{

"type": "pid"

},

{

"type": "ipc",

"path": "/proc/53363/ns/ipc"

},

[ctgcdt@eaci-2 kata-containers]$ sudo crictl inspect 2a6ccbc5457d3 |grep "cgroupsPath" -A 10

"cgroupsPath": "kubepods-burstable-podc80432ca_441d_44a7_9863_e79cb5d50e7f.slice:cri-containerd:2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09",

"namespaces": [

{

"type": "pid"

},

{

"type": "ipc",

"path": "/proc/53363/ns/ipc"

},

[ctgcdt@eaci-2 kata-containers]$ ps -ef|grep 53363

ctgcdt 9914 26248 0 15:45 pts/0 00:00:00 grep --color=auto 53363

root 53363 53342 1 12:54 ? 00:02:09 /opt/kata/bin/cloud-hypervisor-upcall --api-socket /run/vc/vm/8b855dee7ae527aa6cff74eed2637aad8d3d3cc2e2d23a78de12fa2e4cab745c/clh-api.sock

[ctgcdt@eaci-2 kata-containers]$ ps -ef|grep 53342

ctgcdt 10777 26248 0 15:46 pts/0 00:00:00 grep --color=auto 53342

root 53342 1 0 12:54 ? 00:00:25 /opt/kata/bin/containerd-shim-kata-v2 -namespace k8s.io -address /run/containerd/containerd.sock -publish-binary /usr/bin/containerd -id 8b855dee7ae527aa6cff74eed2637aad8d3d3cc2e2d23a78de12fa2e4cab745c -debug

root 53362 53342 0 12:54 ? 00:00:00 /opt/kata/libexec/virtiofsd --syslog -o cache=auto -o no_posix_lock -o source=/run/kata-containers/shared/sandboxes/8b855dee7ae527aa6cff74eed2637aad8d3d3cc2e2d23a78de12fa2e4cab745c/shared --fd=3 -f --thread-pool-size=1 -o announce_submounts

root 53363 53342 1 12:54 ? 00:02:09 /opt/kata/bin/cloud-hypervisor-upcall --api-socket /run/vc/vm/8b855dee7ae527aa6cff74eed2637aad8d3d3cc2e2d23a78de12fa2e4cab745c/clh-api.sock

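The output above shows that each container has a unique cgroup path, but no such cgroup path exists on the host.

Entering the Kata VM with the sandbox ID and looking up the container's cgroup path inside the VM shows that it matches the cgroup path reported above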

[ctgcdt@eaci-2 szj]$ sudo /opt/kata/bin/kata-runtime exec 8b855dee7ae527aa6cff74eed2637aad8d3d3cc2e2d23a78de12fa2e4cab745c

root@localhost:/# find /sys/fs/cgroup/ -name "2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09*"

/sys/fs/cgroup/blkio/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/freezer/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/memory/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/net_cls,net_prio/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/cpuset/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/pids/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/perf_event/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/cpu,cpuacct/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/devices/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

/sys/fs/cgroup/systemd/cri-containerd/2a6ccbc5457d3a8e2efc2d41b90cae2c9c7ed1709efe318b5df19adabfd67c09

Deployed this way, cAdvisor runs as a Kata container. Its container log reports the following errors:

E0710 01:21:45.916388 1 info.go:140] Failed to get system UUID: open /etc/machine-id: no such file or directory

W0710 01:21:45.917063 1 info.go:53] Couldn't collect info from any of the files in "/etc/machine-id,/var/lib/dbus/machine-id"

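W0710 01:21:46.011388 1 manager.go:349] Could not configure a source for OOM detection, disabling OOM events: open /dev/kmsg: no such file or directory

cadvisor cannot obtain the machine-id, even though the Kata VM itself does have /etc/machine-id. Opening a console in the cadvisor container shows that /etc/machine-id does not exist inside the container.

Despite these errors, cadvisor keeps running.

As noted above, the pod contains 2 containers, cadvisor and nginx. The metrics collected by cadvisor can be verified by curling the cadvisor service from the nginx container. Enter the nginx container and curl the cadvisor port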

[ctgcdt@eaci-2 kata-containers]$ sudo crictl exec -it 6ad795788a5a0 sh

# curl localhost:8080/metrics

# HELP cadvisor_version_info A metric with a constant '1' value labeled by kernel version, OS version, docker version, cadvisor version & cadvisor revision.

# TYPE cadvisor_version_info gauge

cadvisor_version_info{cadvisorRevision="8949c822",cadvisorVersion="v0.32.0",dockerVersion="Unknown",kernelVersion="5.10.0",osVersion="Alpine Linux v3.7"} 1

# HELP container_cpu_cfs_periods_total Number of elapsed enforcement period intervals.

# TYPE container_cpu_cfs_periods_total counter

container_cpu_cfs_periods_total{id="/"} 11103

# HELP container_cpu_cfs_throttled_periods_total Number of throttled period intervals.

# TYPE container_cpu_cfs_throttled_periods_total counter

container_cpu_cfs_throttled_periods_total{id="/"} 0

# HELP container_cpu_cfs_throttled_seconds_total Total time duration the container has been throttled.

# TYPE container_cpu_cfs_throttled_seconds_total counter

container_cpu_cfs_throttled_seconds_total{id="/"} 0

# HELP container_cpu_load_average_10s Value of container cpu load average over the last 10 seconds.

# TYPE container_cpu_load_average_10s gauge

container_cpu_load_average_10s{id="/"} 0

# HELP container_cpu_system_seconds_total Cumulative system cpu time consumed in seconds.

# TYPE container_cpu_system_seconds_total counter

container_cpu_system_seconds_total{id="/"} 4.1

# HELP container_cpu_usage_seconds_total Cumulative cpu time consumed in seconds.

# TYPE container_cpu_usage_seconds_total counter

container_cpu_usage_seconds_total{cpu="cpu00",id="/"} 4.391837705

container_cpu_usage_seconds_total{cpu="cpu01",id="/"} 3.694253569

# HELP container_cpu_user_seconds_total Cumulative user cpu time consumed in seconds.

# TYPE container_cpu_user_seconds_total counter

container_cpu_user_seconds_total{id="/"} 3.09

# HELP container_fs_inodes_free Number of available Inodes

# TYPE container_fs_inodes_free gauge

container_fs_inodes_free{device="none",id="/"} 5.7819541e+07

container_fs_inodes_free{device="shm",id="/"} 633115

container_fs_inodes_free{device="tmpfs",id="/"} 633100

# HELP container_fs_inodes_total Number of Inodes

# TYPE container_fs_inodes_total gauge

container_fs_inodes_total{device="none",id="/"} 5.8515456e+07

container_fs_inodes_total{device="shm",id="/"} 633116

container_fs_inodes_total{device="tmpfs",id="/"} 633116

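Surprisingly, cadvisor does not report metrics for the containers in the Kata VM, only for its own container: every series carries id="/".

Comparing the cadvisor pod YAML submitted in the regular k8s cluster with the pod YAML that ended up on the cluster shows that the volumes were not mounted, so cadvisor cannot reach the cgroups in the Kata VM.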
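
A quick way to see which cgroups cAdvisor is actually reporting on is to collect the distinct `id` label values from the `/metrics` output. The following sketch, with a hypothetical helper name, does this for a block of Prometheus text. On the excerpt above it yields only `/`.

```python
import re

# The 'id' label carries the cgroup path that a cAdvisor series refers to.
ID_LABEL_RE = re.compile(r'\bid="([^"]*)"')


def cgroup_ids(metrics_text: str):
    """Return the sorted set of 'id' label values found in metric samples."""
    ids = set()
    for line in metrics_text.splitlines():
        # Skip blank lines and '# HELP' / '# TYPE' comment lines.
        if not line.strip() or line.startswith("#"):
            continue
        m = ID_LABEL_RE.search(line)
        if m:
            ids.add(m.group(1))
    return sorted(ids)


if __name__ == "__main__":
    sample = (
        "# TYPE container_cpu_cfs_periods_total counter\n"
        'container_cpu_cfs_periods_total{id="/"} 11103\n'
        'container_fs_inodes_free{device="shm",id="/"} 633115\n'
    )
    print(cgroup_ids(sample))
```

If the guest cgroups were visible to cAdvisor, entries under `/cri-containerd/` would be expected in the result as well.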

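7) Deploy the cadvisor container to the cluster directly as a Kata container, bypassing the console.

The first attempt, shown next, fails to start the cadvisor container. The suspected cause is the hostPath mount of the host root directory: a Kata container cannot mount the whole / directory. Removing the rootfs mount from cadvisor.yaml and recreating the deployment (see the volumeMounts listing after the kubelet logs) resolves it.

To run the deployment as a Kata container, only runtimeClassName: kata needs to be added under spec.template.spec in cadvisor.yaml, i.e.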

    apiVersion: "apps/v1"
    kind: "Deployment"
    spec:
      template:
        spec:
          runtimeClassName: "kata"

Create the cadvisor pod with kubectl

[ctgcdt@eaci-2 szj]$ kubectl create -f cadvisor.yaml

deployment.apps/cadvisor-1 created

[ctgcdt@eaci-2 szj]$ kubectl get pod -A |grep cadvisor

cadvisor cadvisor-1-8489d659f4-gh2ss 1/2 RunContainerError 2 (2s ago) 21s

The cadvisor container fails to start

[ctgcdt@eaci-2 szj]$ kubectl describe pod cadvisor-1-8489d659f4-gh2ss -ncadvisor

Name: cadvisor-1-8489d659f4-gh2ss

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m12s default-scheduler Successfully assigned cadvisor/cadvisor-1-8489d659f4-gh2ss to eaci-2

Normal Pulled 2m11s kubelet Container image "registry-vpc.guizhouDev.ccr.ctyun.com:32099/temp-del/zhx-01:1.22.0" already present on machine

Normal Created 2m11s kubelet Created container container1-nginx

Normal Started 2m11s kubelet Started container container1-nginx

Warning Failed 88s (x4 over 2m10s) kubelet Error: failed to create containerd task: failed to create shim: No such file or directory (os error 2): unknown

Warning BackOff 50s (x8 over 2m8s) kubelet Back-off restarting failed container

Normal Pulled 38s (x5 over 2m11s) kubelet Container image "registry-vpc.guizhouDev.ccr.ctyun.com:32099/temp-del/zhx-04-cadvisor:latest" already present on machine

Normal Created 38s (x5 over 2m10s) kubelet Created container cadvisor-1

Check the kubelet logs

[ctgcdt@eaci-2 szj]$ sudo journalctl -xeu kubelet --no-pager |grep "failed to create shim"

Jul 12 19:32:24 eaci-2 kubelet[29279]: E0712 19:32:24.750755 29279 remote_runtime.go:453] "StartContainer from runtime service failed" err="rpc error: code = Unknown desc = failed to create containerd task: failed to create shim: No such file or directory (os error 2): unknown" containerID="3824cf50b53951bacc9c1ff455126ecc9b821be56ac241782bd2b8916880213a"

Jul 12 19:32:24 eaci-2 kubelet[29279]: E0712 19:32:24.751238 29279 kuberuntime_manager.go:905] container &Container{Name:cadvisor-1,Image:registry-vpc.guizhouDev.ccr.ctyun.com:32099/temp-del/zhx-04-cadvisor:latest,Command:[],Args:[],WorkingDir:,Ports:[]ContainerPort{ContainerPort{Name:test,HostPort:0,ContainerPort:8080,Protocol:TCP,HostIP:,},},Env:[]EnvVar{},Resources:ResourceRequirements{Limits:ResourceList{cpu: {{800 -3} {<nil>} 800m DecimalSI},memory: {{2097152000 0} {<nil>} BinarySI},},Requests:ResourceList{cpu: {{400 -3} {<nil>} 400m DecimalSI},memory: {{419430400 0} {<nil>} BinarySI},},},VolumeMounts:[]VolumeMount{VolumeMount{Name:rootfs,ReadOnly:true,MountPath:/rootfs,SubPath:,MountPropagation:nil,SubPathExpr:,},VolumeMount{Name:var-run,ReadOnly:true,MountPath:/var/run,SubPath:,MountPropagation:nil,SubPathExpr:,},VolumeMount{Name:sys,ReadOnly:true,MountPath:/sys,SubPath:,MountPropagation:nil,SubPathExpr:,},VolumeMount{Name:docker,ReadOnly:true,MountPath:/var/lib/docker,SubPath:,MountPropagation:nil,SubPathExpr:,},VolumeMount{Name:disk,ReadOnly:true,MountPath:/dev/disk,SubPath:,MountPropagation:nil,SubPathExpr:,},VolumeMount{Name:cgroup,ReadOnly:true,MountPath:/sys/fs/cgroup/,SubPath:,MountPropagation:nil,SubPathExpr:,},VolumeMount{Name:machineid,ReadOnly:true,MountPath:/etc/machine-id,SubPath:,MountPropagation:nil,SubPathExpr:,},},LivenessProbe:nil,ReadinessProbe:nil,Lifecycle:nil,TerminationMessagePath:/dev/termination-log,ImagePullPolicy:IfNotPresent,SecurityContext:nil,Stdin:false,StdinOnce:false,TTY:false,EnvFrom:[]EnvFromSource{},TerminationMessagePolicy:File,VolumeDevices:[]VolumeDevice{},StartupProbe:nil,} start failed in pod cadvisor-1-8489d659f4-gh2ss_cadvisor(fc6e307f-b4e5-4fe8-bbec-74d7a264320b): RunContainerError: failed to create containerd task: failed to create shim: No such file or directory (os error 2): unknown

Jul 12 19:32:24 eaci-2 kubelet[29279]: E0712 19:32:24.751306 29279 pod_workers.go:951] "Error syncing pod, skipping" err="failed to \"StartContainer\" for \"cadvisor-1\" with RunContainerError: \"failed to create containerd task: failed to create shim: No such file or directory (os error 2): unknown\"" pod="cadvisor/cadvisor-1-8489d659f4-gh2ss" podUID=fc6e307f-b4e5-4fe8-bbec-74d7a264320b

Jul 12 19:32:26 eaci-2 kubelet[29279]: E0712 19:32:26.367264 29279 remote_runtime.go:453] "StartContainer from runtime service failed" err="rpc error: code = Unknown desc = failed to create containerd task: failed to create shim: No such file or directory (os error 2): unknown" containerID="f412b8a748ccd441af376a535df6556c86957f34c2458374ff191d19423bc792"

...

            volumeMounts:
            - name: var-run
              mountPath: /var/run
              readOnly: true
            - name: sys
              mountPath: /sys
              readOnly: true
            - name: docker
              mountPath: /var/lib/docker
              readOnly: true
            - name: cgroup
              mountPath: /sys/fs/cgroup/
              readOnly: true
            - name: machineid
              mountPath: /etc/machine-id
              readOnly: true
          volumes:
          - name: var-run
            hostPath:
              path: /var/run
          - name: sys
            hostPath:
              path: /sys
          - name: docker
            hostPath:
              path: /var/lib/docker
          - name: cgroup
            hostPath:
              path: /sys/fs/cgroup/
          - name: machineid
            hostPath:
              path: /etc/machine-id

After recreating the deployment with these mounts, the cadvisor container runs successfully

[ctgcdt@eaci-2 szj]$ kubectl create -f cadvisor.yaml

deployment.apps/cadvisor-1 created

[ctgcdt@eaci-2 szj]$ kubectl get pod -ncadvisor

NAME READY STATUS RESTARTS AGE

cadvisor-1-fffb74dc5-gbnb5 2/2 Running 0 3s

[ctgcdt@eaci-2 szj]$ sudo crictl ps | grep cadvisor-1-fffb74dc5-gbnb5

74289a3a719b0 eb12107075737 33 seconds ago Running cadvisor-1 0 af3e24f8d7859 cadvisor-1-fffb74dc5-gbnb5

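36013eaf6ac7c b3c5c59017fbb 33 seconds ago Running container1-nginx 0 af3e24f8d7859 cadvisor-1-fffb74dc5-gbnb5

Checking the container's mounts shows that only the host's machine-id, run, docker and cadvisor-v0.47.0-linux-amd64 (an extra mount added later as a test) were mounted; cgroup and /var/run were not.

The cadvisor container did mount machine-id, so the machine-id seen inside the container is the host's, whereas the cgroup hierarchy it sees is the VM's.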

[ctgcdt@eaci-2 szj]$ sudo crictl exec -it 74289a3a719b0 sh

/ # cat /etc/machine-id

2ebc452f4c634d8196d17d7359957561

Check the machine-id of the eaci-2 host

[ctgcdt@eaci-2 kata-monitor.service]$ cat /etc/machine-id

2ebc452f4c634d8196d17d7359957561

Check the machine-id of the Kata VM

[ctgcdt@eaci-2 szj]$ sudo crictl inspect 74289a3a719b0 |grep "cgroupsPath" -A 8

"cgroupsPath": "kubepods-burstable-pod30dc6265_dc59_462c_9d31_46df5f6d1472.slice:cri-containerd:74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5",

"namespaces": [

{

"type": "pid"

},

{

"type": "ipc",

"path": "/proc/55048/ns/ipc"

},

[ctgcdt@eaci-2 szj]$ ps -ef|grep 55048

ctgcdt 30838 26248 0 19:50 pts/0 00:00:00 grep --color=auto 55048

root 55048 55030 0 19:45 ? 00:00:02 /opt/kata/bin/cloud-hypervisor-upcall --api-socket /run/vc/vm/af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918/clh-api.sock

[ctgcdt@eaci-2 szj]$ ps -ef|grep 55030

ctgcdt 32403 26248 0 19:50 pts/0 00:00:00 grep --color=auto 55030

root 55030 1 0 19:45 ? 00:00:01 /opt/kata/bin/containerd-shim-kata-v2 -namespace k8s.io -address /run/containerd/containerd.sock -publish-binary /usr/bin/containerd -id af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918 -debug

root 55047 55030 0 19:45 ? 00:00:00 /opt/kata/libexec/virtiofsd --syslog -o cache=auto -o no_posix_lock -o source=/run/kata-containers/shared/sandboxes/af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918/shared --fd=3 -f --thread-pool-size=1 -o announce_submounts

root 55048 55030 0 19:45 ? 00:00:02 /opt/kata/bin/cloud-hypervisor-upcall --api-socket /run/vc/vm/af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918/clh-api.sock

[ctgcdt@eaci-2 szj]$ sudo /opt/kata/bin/kata-runtime exec af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918

root@localhost:/# cat /etc/machine-id

3f2af7ce78a542e0b47e8457df5320ae

So the machine-id mounted in the cadvisor container is the host's, not the Kata VM's.

Enter the other container, nginx, and curl port 8080 of cadvisor to see whether container metrics can now be retrieved

[ctgcdt@eaci-2 szj]$ sudo crictl exec -it 36013eaf6ac7c sh

# curl localhost:8080/metrics

# HELP cadvisor_version_info A metric with a constant '1' value labeled by kernel version, OS version, docker version, cadvisor version & cadvisor revision.

# TYPE cadvisor_version_info gauge

cadvisor_version_info{cadvisorRevision="8949c822",cadvisorVersion="v0.32.0",dockerVersion="Unknown",kernelVersion="5.10.0",osVersion="Alpine Linux v3.7"} 1

# HELP container_cpu_cfs_periods_total Number of elapsed enforcement period intervals.

# TYPE container_cpu_cfs_periods_total counter

container_cpu_cfs_periods_total{id="/cri-containerd/36013eaf6ac7c19f93dca8394ffb5350dd9cf08294b4e57d479696775c9e8e08"} 13

container_cpu_cfs_periods_total{id="/cri-containerd/74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5"} 370

# HELP container_cpu_cfs_throttled_periods_total Number of throttled period intervals.

# TYPE container_cpu_cfs_throttled_periods_total counter

container_cpu_cfs_throttled_periods_total{id="/cri-containerd/36013eaf6ac7c19f93dca8394ffb5350dd9cf08294b4e57d479696775c9e8e08"} 6

container_cpu_cfs_throttled_periods_total{id="/cri-containerd/74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5"} 0

# HELP container_cpu_cfs_throttled_seconds_total Total time duration the container has been throttled.

# TYPE container_cpu_cfs_throttled_seconds_total counter

container_cpu_cfs_throttled_seconds_total{id="/cri-containerd/36013eaf6ac7c19f93dca8394ffb5350dd9cf08294b4e57d479696775c9e8e08"} 0.299155167

container_cpu_cfs_throttled_seconds_total{id="/cri-containerd/74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5"} 0

# HELP container_cpu_load_average_10s Value of container cpu load average over the last 10 seconds.

# TYPE container_cpu_load_average_10s gauge

container_cpu_load_average_10s{id="/"} 0

container_cpu_load_average_10s{id="/cri-containerd"} 0

container_cpu_load_average_10s{id="/cri-containerd/36013eaf6ac7c19f93dca8394ffb5350dd9cf08294b4e57d479696775c9e8e08"} 0

container_cpu_load_average_10s{id="/cri-containerd/74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5"} 0

container_cpu_load_average_10s{id="/cri-containerd/af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918"} 0

container_cpu_load_average_10s{id="/system.slice"} 0

container_cpu_load_average_10s{id="/system.slice/chrony.service"} 0

container_cpu_load_average_10s{id="/system.slice/kata-agent.service"} 0

# HELP container_cpu_system_seconds_total Cumulative system cpu time consumed in seconds.

# TYPE container_cpu_system_seconds_total counter

container_cpu_system_seconds_total{id="/"} 0.59

container_cpu_system_seconds_total{id="/cri-containerd"} 0.25

container_cpu_system_seconds_total{id="/cri-containerd/36013eaf6ac7c19f93dca8394ffb5350dd9cf08294b4e57d479696775c9e8e08"} 0.06

container_cpu_system_seconds_total{id="/cri-containerd/74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5"} 0.17

container_cpu_system_seconds_total{id="/cri-containerd/af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918"} 0

container_cpu_system_seconds_total{id="/system.slice"} 0

container_cpu_system_seconds_total{id="/system.slice/chrony.service"} 0

container_cpu_system_seconds_total{id="/system.slice/kata-agent.service"} 0

# HELP container_cpu_usage_seconds_total Cumulative cpu time consumed in seconds.

# TYPE container_cpu_usage_seconds_total counter

container_cpu_usage_seconds_total{cpu="cpu00",id="/"} 1.015071982

container_cpu_usage_seconds_total{cpu="cpu00",id="/cri-containerd"} 0.498926108

container_cpu_usage_seconds_total{cpu="cpu00",id="/cri-containerd/36013eaf6ac7c19f93dca8394ffb5350dd9cf08294b4e57d479696775c9e8e08"} 0.083218552

container_cpu_usage_seconds_total{cpu="cpu00",id="/cri-containerd/74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5"} 0.412888108

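container_cpu_usage_seconds_total{cpu="cpu00",id="/cri-containerd/af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918"} 0.001918424

At this point cadvisor can retrieve metrics for the 3 containers in the Kata VM. Comparing against the pod information on the host, the 3 container IDs correspond to container1-nginx, cadvisor1, and the sandbox (pause container).

The pod information on the host is as follows: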
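The ID comparison above can also be done mechanically. The following is a minimal Python sketch, not part of the original procedure, that groups samples from the `/metrics` output by the container ID found in the `id` label. The function name `metrics_by_container` and the regular expressions are assumptions made for illustration. It only handles the simple `name{labels} value` lines shown above.

```python
import re
from collections import defaultdict

# Matches a sample line such as:
#   container_cpu_cfs_periods_total{id="/cri-containerd/<64 hex chars>"} 13
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)$'
)
# Extracts the bare container ID from the id label.
ID_RE = re.compile(r'id="/cri-containerd/(?P<cid>[0-9a-f]{64})"')


def metrics_by_container(text):
    """Group sample values by the container ID in the id label.

    Lines without a /cri-containerd/<id> label (for example id="/" or
    id="/system.slice") are skipped. If a metric has several samples per
    container (one per cpu, say), the last one wins in this sketch.
    """
    result = defaultdict(dict)
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        sample = SAMPLE_RE.match(line)
        if not sample:
            continue
        cid = ID_RE.search(sample.group("labels"))
        if cid:
            name = sample.group("name")
            result[cid.group("cid")][name] = float(sample.group("value"))
    return dict(result)
```

Feeding it the saved output of `curl localhost:8080/metrics` returns one dictionary entry per container ID, which can then be compared with the IDs in the pod's `containerStatuses`.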

[ctgcdt@eaci-2 szj]$ kubectl get pod cadvisor-1-fffb74dc5-gbnb5 -ncadvisor -oyaml

apiVersion: v1

kind: Pod

metadata:

  annotations:

    cni.projectcalico.org/containerID: af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918

...

spec:

...

containerStatuses:

- containerID: containerd://74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5

  image: registry-vpc.guizhouDev.ccr.ctyun.com:32099/temp-del/zhx-04-cadvisor:latest

  ...

- containerID: containerd://36013eaf6ac7c19f93dca8394ffb5350dd9cf08294b4e57d479696775c9e8e08

  image: registry-vpc.guizhouDev.ccr.ctyun.com:32099/temp-del/zhx-01:1.22.0

...

View the cgroup directory inside the Kata VM:

[ctgcdt@eaci-2 szj]$ sudo /opt/kata/bin/kata-runtime exec af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918

root@localhost:/# ls /sys/fs/cgroup/blkio/cri-containerd/

36013eaf6ac7c19f93dca8394ffb5350dd9cf08294b4e57d479696775c9e8e08

74289a3a719b0af41d366a9905d1d5cb37f79133e310744dd7a5664fb7a993f5

af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918

blkio.reset_stats

blkio.throttle.io_service_bytes

blkio.throttle.io_service_bytes_recursive

blkio.throttle.io_serviced

blkio.throttle.io_serviced_recursive

blkio.throttle.read_bps_device

blkio.throttle.read_iops_device

blkio.throttle.write_bps_device

blkio.throttle.write_iops_device

cgroup.clone_children

cgroup.procs

notify_on_release

tasks

root@localhost:/# exit

View the cgroup directory on the eaci-2 host:

[ctgcdt@eaci-2 kata-monitor.service]$ ls /sys/fs/cgroup/blkio

blkio.io_merged blkio.weight

blkio.io_merged_recursive blkio.weight_device

blkio.io_queued cgroup.clone_children

blkio.io_queued_recursive cgroup.procs

blkio.io_service_bytes cgroup.sane_behavior

blkio.io_service_bytes_recursive kata_overhead

blkio.io_serviced kubepods-besteffort-pod2c1e9612_3136_47b9_a86d_a4e08aa53bd1.slice:cri-containerd:ad803a3a6b5653aa8e3532f584d748032589ff08e9fea4f96200657f5620712d

blkio.io_serviced_recursive kubepods-besteffort-pod330c9ee6_5f44_47ab_adc7_27ef7326a71c.slice:cri-containerd:e28700e7d9425b717807b34c0f727478d5f0976ebcb2c6ba419bfdc2ea0a045f

blkio.io_service_time kubepods-besteffort-pod662e97d0_6e01_4070_b4b2_8c602e8f0562.slice:cri-containerd:eb0eaa378890bfe35fe7bb6679d583bb42d3ce57f96d6740b54de3c6e945ed00

blkio.io_service_time_recursive kubepods-besteffort-pod73d081a7_4d33_4894_8202_3be553aa8346.slice:cri-containerd:39c289e8705ebc31a3cf1452af1871c432775a9461e68fa3233ad369f6061c11

blkio.io_wait_time kubepods-burstable-pod1db6ef27_f319_4ea5_9c1f_a3f29a262014.slice:cri-containerd:cec9918a94ff034ac0bbc4289e66bb2cfc7bf15828f77fe6a9448769f02d4a1e

blkio.io_wait_time_recursive kubepods-burstable-pod30dc6265_dc59_462c_9d31_46df5f6d1472.slice:cri-containerd:af3e24f8d7859884f7508e8eaf57b6854b294aa1e527933aa8aff16bfa6ed918

blkio.leaf_weight kubepods-burstable-pod3910d940_affb_46bf_8695_3919948b8c1a.slice:cri-containerd:81403f92bbb74315a543fe264a7c1e681687582db2751db4cd4e9fe953be2cde

blkio.leaf_weight_device kubepods-burstable-pod4af44abf_5844_45ef_8b54_e7082be462b8.slice:cri-containerd:c8277059ba59b85c0276859f6e2c964cbb8999568f50391fbbabf36bd13ca863

blkio.reset_stats kubepods-burstable-pod545f3060_9ae3_4c05_8d95_c3b3a9456eaa.slice:cri-containerd:ad96d95e7679aa32aee9740bb79ed4903990d8a6759922d40ed7e7164e0de320

blkio.sectors kubepods-burstable-podbb10f084_5655_4f36_bc8f_83324807ddd9.slice:cri-containerd:e605244a1e7cfd45be8a11ebb3cafbd559cd75debb355281921373de762720ff

blkio.sectors_recursive kubepods-burstable-podfe60ea2b_190f_45fd_892b_bee49b7b2602.slice:cri-containerd:451e1ded50bb6ad3db101af4c6d02727a79b3df0821a0fe0ff451e4bbbf65351

blkio.throttle.io_service_bytes kubepods.slice

blkio.throttle.io_service_bytes_recursive machine.slice

blkio.throttle.io_serviced notify_on_release

blkio.throttle.io_serviced_recursive release_agent

blkio.throttle.read_bps_device systemd

blkio.throttle.read_iops_device system.slice

blkio.throttle.write_bps_device tasks

blkio.throttle.write_iops_device user.slice

blkio.time vc

blkio.time_recursive

From the above, the cgroup mounted in the cadvisor container is the cgroup path inside the Kata VM, whereas the machine-id mounted in it is the host's machine-id.
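The difference between the two listings can be sketched in Python. Inside the Kata VM, each container has a directory named by its bare 64-character ID under `cri-containerd/`. On the host there is only one entry per sandbox, named `<pod slice>:cri-containerd:<sandbox id>`. The helper names below are hypothetical and the naming patterns are inferred only from the two listings above, so treat this as an illustration rather than a general rule.

```python
import os
import re

HEX64 = re.compile(r"^[0-9a-f]{64}$")


def guest_container_ids(names):
    """Pick entries that look like bare container IDs (guest-side layout)."""
    return sorted(n for n in names if HEX64.match(n))


def host_sandbox_ids(names):
    """Pick sandbox IDs from '<pod slice>:cri-containerd:<id>' entries (host-side layout)."""
    ids = []
    for n in names:
        parts = n.split(":")
        if len(parts) == 3 and parts[1] == "cri-containerd" and HEX64.match(parts[2]):
            ids.append(parts[2])
    return sorted(ids)


def list_cgroup_dir(path):
    """Thin wrapper: return the entry names of a cgroup directory."""
    return os.listdir(path)
```

For example, `guest_container_ids(list_cgroup_dir("/sys/fs/cgroup/blkio/cri-containerd"))` run inside the VM should return the three IDs listed there, while `host_sandbox_ids(list_cgroup_dir("/sys/fs/cgroup/blkio"))` on the host returns sandbox IDs only.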

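8) Summary:

1. Fetching cadvisor metrics through the kubelet on a cluster node yields pod-level metrics only, not metrics for the containers inside the pod. The node can see only the processes of the Kata container (that is, the sandbox) and has no cgroup information for the containers inside the pod.

2. A Kata-type cadvisor container created through the console cannot retrieve container metrics. Kata containers created through the console cannot mount a hostPath, so the cadvisor container cannot read the containers' cgroup information.

3. A runc-type cadvisor container created directly in the cluster does not run properly. The cluster contains both Kata-type and runc-type containers, and calling cadvisor's metrics endpoint causes cadvisor to crash and restart.

4. A Kata-type cadvisor container created directly in the cluster retrieves container metrics correctly, but the metrics carry only container IDs, not container names.

5. Running the cadvisor binary directly inside the Kata VM retrieves container metrics correctly. The data is essentially the same as in point 4, and it likewise carries only container IDs, not container names.

6. On an ordinary k8s cluster node, fetching cadvisor metrics through the kubelet retrieves container metrics correctly, including the container name, container ID, image path, namespace, and pod name.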
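Points 4 and 5 note that the metrics carry only container IDs. One possible way to recover names, sketched here as an assumption rather than something tested in this document, is to join those IDs against the pod's `status.containerStatuses`, which lists each container's `name` next to a `containerID` of the form `containerd://<id>`. The function names are hypothetical. The input is the JSON text printed by `kubectl get pod <pod> -n <namespace> -o json`.

```python
import json


def container_names_by_id(pod_json_text):
    """Map bare container IDs to container names from a pod's JSON."""
    pod = json.loads(pod_json_text)
    mapping = {}
    for status in pod.get("status", {}).get("containerStatuses", []):
        container_id = status.get("containerID", "")
        # containerID is reported as '<runtime>://<id>'; keep only the bare ID.
        _, sep, bare_id = container_id.partition("://")
        if sep and bare_id:
            mapping[bare_id] = status.get("name", "")
    return mapping


def name_for(container_id, mapping):
    """Return the container name, or the ID itself when no name is known."""
    return mapping.get(container_id, container_id)
```

The sandbox (pause container) does not appear in `containerStatuses`, so its ID falls through `name_for` unchanged. In the pod shown earlier it matches the `cni.projectcalico.org/containerID` annotation.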