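In a cloud network, packets sent by a tenant are encapsulated in a VXLAN tunnel on the compute node and sent to the network node. The NAT gateway on the network node decapsulates the tenant's VXLAN packets and then processes and forwards them according to its NAT configuration. Along this path, the main packet processing on the network node consists of VXLAN decapsulation, connection tracking, and cross-NUMA forwarding. These stages stress two aspects of performance: memory read/write performance and CPU packet-processing performance.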
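The header overhead that VXLAN decapsulation removes can be counted directly. This assumes an untagged outer Ethernet header and an IPv4 outer header without options (the VTEP addresses in this test are IPv4). Under those assumptions, each encapsulated packet carries:

$$
L_{\text{outer}} = \underbrace{14}_{\text{outer Ethernet}} + \underbrace{20}_{\text{outer IPv4}} + \underbrace{8}_{\text{outer UDP}} + \underbrace{8}_{\text{VXLAN header}} = 50\ \text{bytes}
$$

An inner Ethernet frame of $N$ bytes (excluding FCS) is therefore carried as an outer frame of $N + 50$ bytes. The gateway has to parse and strip these 50 bytes on every packet it receives from the tunnel.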

For traffic generation we use dperf, a DPDK-based tool that can generate traffic at line rate in software.

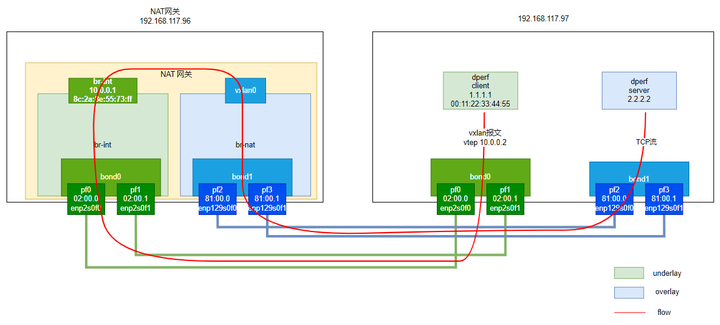

测试网络拓扑如下:

The network node is configured as follows. The script mainly starts and configures OVS.

```bash
#!/bin/bash

# Reset OVS: stop the service, drop the old database, restart with a fresh one
systemctl stop openvswitch
rm /etc/openvswitch/conf.db
systemctl restart openvswitch

LOCAL_VTEP_IP=10.0.0.1
# remote_ip=flow: the tunnel destination is set per packet by OpenFlow (tun_dst)
REMOTE_VTEP_IP="flow"
# REMOTE_VTEP_IP=10.0.0.2
bond0pci="0000:02:00.0,0000:02:00.1"
bond1pci="0000:81:00.0"
# bond1pci="0000:81:00.0,0000:81:00.1"
vni="1000"

# Start ovsdb-server
# Configure and start ovs-vswitchd
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
#ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask="0xfff0000000000000"
#ovs-vsctl --no-wait set Open_vSwitch . other_config:n-handler-threads=16
#ovs-vsctl --no-wait set Open_vSwitch . other_config:n-revalidator-threads=16
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="0,0,0,0"
# ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="10240,10240"
ovs-vsctl --no-wait set Open_vSwitch . other_config:max-idle="60000"
# PMD threads run on the CPUs selected by this 128-bit mask
ovs-vsctl --no-wait set Open_vSwitch . other_config:pmd-cpu-mask="0x0000ffffffffffff0000ffffffffffff"
ovs-vsctl --no-wait init

# Configure bridges (userspace "netdev" datapath)
#   br-int: underlay side, bond0 plus the local VTEP IP
#   br-nat: overlay side, vxlan0 tunnel port plus bond1 towards the server
ovs-vsctl --may-exist add-br br-nat -- set bridge br-nat datapath_type=netdev
ovs-vsctl --may-exist add-br br-int -- set bridge br-int datapath_type=netdev
ovs-vsctl --may-exist add-port br-int bond0 -- set Interface bond0 type=dpdk \
    options:dpdk-devargs=$bond0pci options:n_rxq=32 \
    options:dpdkbond-mode=active_backup
ovs-vsctl --may-exist add-port br-nat bond1 -- set Interface bond1 type=dpdk \
    options:dpdk-devargs=$bond1pci options:n_rxq=32 \
    options:dpdkbond-mode=active_backup
# Put the local VTEP IP on br-int so the userspace tunnel can route and ARP through it
ifconfig br-int up
ifconfig br-int $LOCAL_VTEP_IP/24
ovs-vsctl --may-exist add-port br-nat vxlan0 -- set interface vxlan0 type=vxlan \
    options:local_ip=$LOCAL_VTEP_IP \
    options:remote_ip=$REMOTE_VTEP_IP \
    options:key=$vni

# Config flows
# table 0: for traffic arriving from bond1 (server side), choose the tunnel
# destination: source 2.2.2.i is sent back to VTEP 10.0.0.i
for i in $(seq 0 1 70); do
    ovs-ofctl add-flow br-nat "priority=100,table=0,in_port=bond1,ip,nw_src=2.2.2.$i,action=set_field:10.0.0.${i}->tun_dst,resubmit(,1)"
done
ovs-ofctl add-flow br-nat "table=0,priority=10,action=resubmit(,1)"

# table 1: send untracked IP packets through conntrack, then continue in table 2
ovs-ofctl add-flow br-nat "table=1,priority=10,ip,ct_state=-trk,action=ct(table=2)"
# table 2: commit new connections; forward new and established ones between vxlan0 and bond1
ovs-ofctl add-flow br-nat "table=2,in_port=vxlan0,ip,ct_state=+trk+new,action=ct(commit),output:bond1"
ovs-ofctl add-flow br-nat "table=2,in_port=bond1,ip,ct_state=+trk+new,action=ct(commit),output:vxlan0"
ovs-ofctl add-flow br-nat "table=2,in_port=vxlan0,ip,ct_state=+trk+est,action=output:bond1"
ovs-ofctl add-flow br-nat "table=2,in_port=bond1,ip,ct_state=+trk+est,action=output:vxlan0"

# br-int: plain forwarding between the bridge's local port and bond0
ovs-ofctl add-flow br-int "in_port=br-int,action=output:bond0"
ovs-ofctl add-flow br-int "in_port=bond0,action=output:br-int"
```
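The `pmd-cpu-mask` in the script is a 128-bit hex value. That is wider than bash's 64-bit arithmetic and hard to read by eye. The sketch below decodes such a mask one hex digit at a time and prints the selected logical CPUs as ranges. The helper names `mask_to_cpus` and `cpus_to_ranges` are hypothetical, not part of OVS.

Which NUMA node each CPU belongs to depends on the machine (for example, as reported by `lscpu`). So this only shows where PMD threads may run, not whether a given flow crosses NUMA nodes.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: decode an OVS pmd-cpu-mask into CPU ids and ranges.

# mask_to_cpus MASK: print the ids of the CPUs whose bits are set in MASK.
# Works one hex digit (4 CPUs) at a time from the least significant end,
# so masks wider than 64 bits are handled correctly.
mask_to_cpus() {
  local hex=${1#0[xX]} cpus=() i d bit
  local n=${#hex}
  for (( i = 0; i < n; i++ )); do
    d=$(( 16#${hex:n-1-i:1} ))        # i-th hex digit counted from the right
    for (( bit = 0; bit < 4; bit++ )); do
      if (( (d >> bit) & 1 )); then
        cpus+=( $(( i * 4 + bit )) )
      fi
    done
  done
  echo "${cpus[*]}"
}

# cpus_to_ranges ID...: compress ascending CPU ids into "a-b,c" form.
cpus_to_ranges() {
  local ranges=() start="" prev="" c
  for c in "$@" ""; do                # trailing "" is a sentinel that flushes the last range
    if [[ -n $start && ( -z $c || $c -ne $((prev + 1)) ) ]]; then
      if (( start == prev )); then ranges+=("$start"); else ranges+=("$start-$prev"); fi
      start=""
    fi
    if [[ -n $c && -z $start ]]; then start=$c; fi
    prev=$c
  done
  local IFS=,
  echo "${ranges[*]}"
}

# Usage: decode the mask used in the OVS script above
cpus_to_ranges $(mask_to_cpus 0x0000ffffffffffff0000ffffffffffff)
```

Working per hex digit avoids any dependence on integer width, so the same helper works for 64-core and 128-core masks alike.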

The dperf client configuration is as follows:

```
mode client
cpu 0-63
socket_mem 16300,0
tx_burst 64
payload_size 1
launch_num 1
duration 2d
protocol http
# keepalive 1ms 5
# cps 130000
# cps 210000
cps 210000
# cps 10000
#port pci addr gateway(ovs-vtep) outer-dmac(ovs-vtep)
port 0000:02:00.0 10.0.0.2 10.0.0.1 8c:2a:8e:55:73:ff
#vxlan vni inner-smac inner-dmac vtep-local num vtep-remote(ovs) num
vxlan 1000 00:11:22:33:44:55 8c:2a:8e:55:73:97 10.0.0.2 64 10.0.0.1 1
# addr_start num
client 1.1.1.1 1
server 2.2.2.2 64
listen 80 1
```
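In br-nat, vxlan0 is created with `remote_ip=flow`, so OVS takes the tunnel destination from each packet's `tun_dst`. For replies arriving from the server on bond1, the table-0 loop sets `tun_dst` to 10.0.0.i for source address 2.2.2.i.

The dry-run sketch below uses a hypothetical function `print_tun_map` written in pure bash, with no OVS calls. It lists that mapping for the server range in this client config (`server 2.2.2.2 64`). It also checks that each address falls inside both the loop range (`seq 0 1 70`) and the client VTEP range (`vxlan ... 10.0.0.2 64 ...`). Treating the server and VTEP ranges as paired one-to-one is an assumption read from the flows, not from dperf documentation.

```bash
#!/usr/bin/env bash
# Hypothetical dry run of the br-nat table-0 loop (no OVS commands are executed).
# print_tun_map SERVER_FIRST SERVER_NUM VTEP_FIRST VTEP_NUM LOOP_MAX
#   SERVER_FIRST/SERVER_NUM: last octet and count of the dperf server range (2.2.2.x)
#   VTEP_FIRST/VTEP_NUM:     last octet and count of the client VTEP range (10.0.0.x)
#   LOOP_MAX:                upper bound of "seq 0 1 LOOP_MAX" in the OVS script
print_tun_map() {
  local server_first=$1 server_num=$2 vtep_first=$3 vtep_num=$4 loop_max=$5 i
  for (( i = server_first; i < server_first + server_num; i++ )); do
    if (( i > loop_max )); then
      echo "2.2.2.$i: no table-0 flow (loop stops at $loop_max)" >&2
      return 1
    fi
    if (( i < vtep_first || i >= vtep_first + vtep_num )); then
      echo "2.2.2.$i: 10.0.0.$i is outside the client VTEP range" >&2
      return 1
    fi
    echo "2.2.2.$i -> tun_dst 10.0.0.$i"
  done
}

# Values from this test: "server 2.2.2.2 64", "vxlan ... 10.0.0.2 64 ...", "seq 0 1 70"
print_tun_map 2 64 2 64 70
```

If either config range is widened (for example, more server addresses), re-running the check shows whether the `seq` upper bound in the OVS script also needs to grow.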

The dperf server configuration is as follows:

```
mode server
cpu 64-127
socket_mem 0,10240
duration 1d
#payload_size 1
protocol http
#port pci addr gateway(target ip) target-mac
port 0000:81:00.0 2.2.2.2 1.1.1.1 00:11:22:33:44:55
#port 0000:81:00.0 2.2.2.2 10.0.0.1 8c:2a:8e:55:73:ff
client 1.1.1.1 1
server 2.2.2.2 64
listen 80 1
```
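In this setup the server sends its replies to `1.1.1.1` at MAC `00:11:22:33:44:55`, the same value as the client's `inner-smac`. The br-nat flows forward packets without rewriting MAC addresses, so the inner frame reaching the client carries exactly the destination MAC the server chose. That is presumably why the two values are kept identical. Both configs also declare identical `client`, `server`, and `listen` lines.

A small consistency check could look like the following. `check_pair` is a hypothetical helper that reads field positions from the comment lines in the two configs above. The file names in the usage line are placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a dperf client/server config pair for this test.
# Field positions follow the comment lines in the configs above:
#   vxlan <vni> <inner-smac> <inner-dmac> <vtep-local> <num> <vtep-remote> <num>
#   port  <pci> <addr> <gateway> <target-mac>
check_pair() {
  local client_conf=$1 server_conf=$2 inner_smac target_mac key
  # Commented-out lines start with '#', so matching on the first field skips them
  inner_smac=$(awk '$1 == "vxlan" { print $3 }' "$client_conf")
  target_mac=$(awk '$1 == "port" { print $5 }' "$server_conf")
  if [[ -z $inner_smac || $inner_smac != "$target_mac" ]]; then
    echo "MISMATCH: client inner-smac '$inner_smac' vs server target-mac '$target_mac'"
    return 1
  fi
  # In this setup both sides declare the same address/port ranges
  for key in client server listen; do
    if [[ "$(grep "^$key " "$client_conf")" != "$(grep "^$key " "$server_conf")" ]]; then
      echo "MISMATCH: '$key' lines differ"
      return 1
    fi
  done
  echo "OK"
}

# Usage (placeholder file names):
# check_pair client.conf server.conf
```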