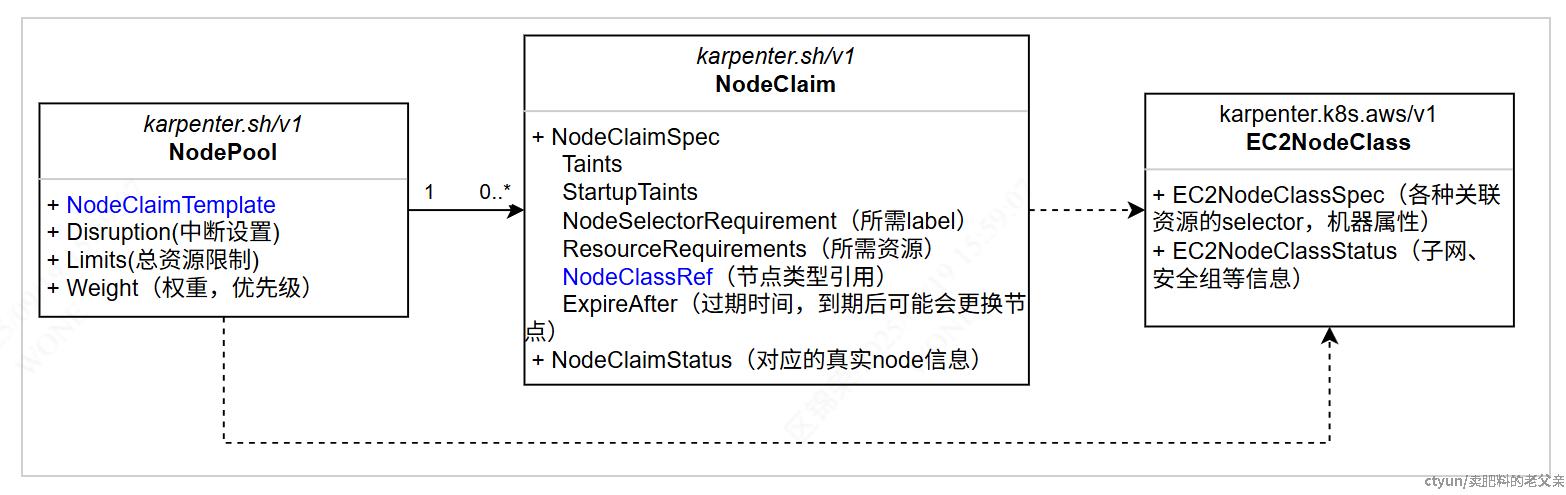

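Users define the NodePool and the EC2NodeClass (or another cloud provider's NodeClass); the NodeClaim is generated by Karpenter.

A NodePool is a logical grouping that expresses the configuration requirements for a set of nodes. It describes the attributes of a class of nodes, such as CPU, memory, and zone topology (not shown in the earlier example), and it also specifies the taints that will be applied to the nodes it creates.

A NodeClaim is the resource Karpenter uses to describe a demand for a node. It can be thought of as a request for one concrete node, and it captures the specific requirements that Pods place on that node. It is an internal resource, so users generally do not need to work with NodeClaims directly.

When the cluster needs to scale up, Karpenter picks the most suitable of the existing NodePools and then generates, from that NodePool, a NodeClaim that is compatible with the current demand.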

NodePool

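When you first install Karpenter, you set up a default NodePool. A NodePool constrains the nodes that Karpenter can create and the Pods that can run on those nodes. The main things a NodePool can do are:

- Define taints and startup taints

- Limit node creation to certain zones, instance types, and computer architectures

- Set a default expiration time for nodes

You can change your NodePool or add other NodePools to Karpenter. Here is what you need to know about NodePools:

- If no NodePool is configured, Karpenter will not do anything.

- Karpenter loops through every configured NodePool.

- If Karpenter encounters a taint in a NodePool that a Pod does not tolerate, Karpenter will not use that NodePool to provision for the Pod.

- If Karpenter encounters a startup taint in a NodePool, it applies the taint to the nodes it provisions, but Pods do not need to tolerate it. Karpenter assumes the taint is temporary and that some other system will remove it.

- It is recommended to create mutually exclusive NodePools, so that no Pod matches more than one NodePool. If a Pod does match multiple NodePools, Karpenter uses the NodePool with the highest weight.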
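The weight rule in the last point can be illustrated with two NodePools that deliberately overlap. This is a minimal sketch rather than a recommended layout: the names `on-demand-first` and `spot-fallback` and the weight values are hypothetical, and both pools assume that an EC2NodeClass named `default` exists. A Pod that does not constrain `karpenter.sh/capacity-type` matches both pools, so Karpenter would consider the pool with the higher weight first.

```yaml
# Sketch only: names and weights are hypothetical.
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: on-demand-first
spec:
  weight: 50            # higher weight, preferred when a Pod matches both pools
  template:
    spec:
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default   # assumed to exist
      requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand"]
---
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: spot-fallback
spec:
  weight: 10            # lower weight, considered after on-demand-first
  template:
    spec:
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default   # assumed to exist
      requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["spot"]
```

A Pod that sets a `nodeSelector` of `karpenter.sh/capacity-type: spot` would match only the second pool, which is the mutually exclusive arrangement the recommendation aims for.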

    apiVersion: karpenter.sh/v1
    kind: NodePool
    metadata:
      name: default
    spec:
      # Template section that describes how to template out NodeClaim resources that Karpenter will provision
      # Karpenter will consider this template to be the minimum requirements needed to provision a Node using this NodePool
      # It will overlay this NodePool with Pods that need to schedule to further constrain the NodeClaims
      # Karpenter will provision to launch new Nodes for the cluster
      template:
        metadata:
          # Labels are arbitrary key-values that are applied to all nodes
          labels:
            billing-team: my-team
          # Annotations are arbitrary key-values that are applied to all nodes
          annotations:
            example.com/owner: "my-team"
        spec:
          # References the Cloud Provider's NodeClass resource, see your cloud provider specific documentation
          nodeClassRef:
            group: karpenter.k8s.aws  # Updated since only a single version will be served
            kind: EC2NodeClass
            name: default
          # Provisioned nodes will have these taints
          # Taints may prevent pods from scheduling if they are not tolerated by the pod.
          taints:
            - key: example.com/special-taint
              effect: NoSchedule
          # Provisioned nodes will have these taints, but pods do not need to tolerate these taints to be provisioned by this
          # NodePool. These taints are expected to be temporary and some other entity (e.g. a DaemonSet) is responsible for
          # removing the taint after it has finished initializing the node.
          startupTaints:
            - key: example.com/another-taint
              effect: NoSchedule
          # The amount of time a Node can live on the cluster before being removed
          # Avoiding long-running Nodes helps to reduce security vulnerabilities as well as to reduce the chance of issues that can plague Nodes with long uptimes such as file fragmentation or memory leaks from system processes
          # You can choose to disable expiration entirely by setting the string value 'Never' here
          # Note: changing this value in the nodepool will drift the nodeclaims.
          expireAfter: 720h  # or Never
          # The amount of time that a node can be draining before it's forcibly deleted. A node begins draining when a delete call is made against it, starting
          # its finalization flow. Pods with TerminationGracePeriodSeconds will be deleted preemptively before this terminationGracePeriod ends to give as much time to cleanup as possible.
          # If your pod's terminationGracePeriodSeconds is larger than this terminationGracePeriod, Karpenter may forcibly delete the pod
          # before it has its full terminationGracePeriod to cleanup.
          # Note: changing this value in the nodepool will drift the nodeclaims.
          terminationGracePeriod: 48h
          # Requirements that constrain the parameters of provisioned nodes.
          # These requirements are combined with pod.spec.topologySpreadConstraints, pod.spec.affinity.nodeAffinity, pod.spec.affinity.podAffinity, and pod.spec.nodeSelector rules.
          # Operators { In, NotIn, Exists, DoesNotExist, Gt, and Lt } are supported.
          # kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#operators
          requirements:
            - key: "karpenter.k8s.aws/instance-category"
              operator: In
              values: ["c", "m", "r"]
              # minValues here enforces the scheduler to consider at least that number of unique instance-category to schedule the pods.
              # This field is ALPHA and can be dropped or replaced at any time
              minValues: 2
            - key: "karpenter.k8s.aws/instance-family"
              operator: In
              values: ["m5","m5d","c5","c5d","c4","r4"]
              minValues: 5
            - key: "karpenter.k8s.aws/instance-cpu"
              operator: In
              values: ["4", "8", "16", "32"]
            - key: "karpenter.k8s.aws/instance-hypervisor"
              operator: In
              values: ["nitro"]
            - key: "karpenter.k8s.aws/instance-generation"
              operator: Gt
              values: ["2"]
            - key: "topology.kubernetes.io/zone"
              operator: In
              values: ["us-west-2a", "us-west-2b"]
            - key: "kubernetes.io/arch"
              operator: In
              values: ["arm64", "amd64"]
            - key: "karpenter.sh/capacity-type"
              operator: In
              values: ["spot", "on-demand"]
      # Disruption section which describes the ways in which Karpenter can disrupt and replace Nodes
      # Configuration in this section constrains how aggressive Karpenter can be with performing operations
      # like rolling Nodes due to them hitting their maximum lifetime (expiry) or scaling down nodes to reduce cluster cost
      disruption:
        # Describes which types of Nodes Karpenter should consider for consolidation
        # If using 'WhenEmptyOrUnderutilized', Karpenter will consider all nodes for consolidation and attempt to remove or replace Nodes when it discovers that the Node is empty or underutilized and could be changed to reduce cost
        # If using `WhenEmpty`, Karpenter will only consider nodes for consolidation that contain no workload pods
        consolidationPolicy: WhenEmptyOrUnderutilized  # or WhenEmpty
        # The amount of time Karpenter should wait to consolidate a node after a pod has been added or removed from the node.
        # You can choose to disable consolidation entirely by setting the string value 'Never' here
        consolidateAfter: 1m  # or Never; added to allow additional control over consolidation aggressiveness
        # Budgets control the speed Karpenter can scale down nodes.
        # Karpenter will respect the minimum of the currently active budgets, and will round up
        # when considering percentages. Duration and Schedule must be set together.
        budgets:
          - nodes: 10%
          # On Weekdays during business hours, don't do any deprovisioning.
          - schedule: "0 9 * * mon-fri"
            duration: 8h
            nodes: "0"
      # Resource limits constrain the total size of the pool.
      # Limits prevent Karpenter from creating new instances once the limit is exceeded.
      limits:
        cpu: "1000"
        memory: 1000Gi
      # Priority given to the NodePool when the scheduler considers which NodePool
      # to select. Higher weights indicate higher priority when comparing NodePools.
      # Specifying no weight is equivalent to specifying a weight of 0.
      weight: 10
    status:
      conditions:
        - type: Initialized
          status: "False"
          observedGeneration: 1
          lastTransitionTime: "2024-02-02T19:54:34Z"
          reason: NodeClaimNotLaunched
          message: "NodeClaim hasn't succeeded launch"
      resources:
        cpu: "20"
        memory: "8192Mi"
        ephemeral-storage: "100Gi"

Of these settings, requirements is the most important. It describes the range of attributes allowed for this group of nodes, and Karpenter uses that range as its reference when choosing a NodePool to provision for a Pod.

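requirements is typically used to describe attributes tied to the instance offering, such as instance size, zone, and billing mode (capacity type). For the range of supported labels, see karpenter.sh/docs/concepts/scheduling/#well-known-labels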
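To show how a Pod narrows the range that requirements allows, here is a minimal sketch of a Pod that could be scheduled through the example NodePool above. The Pod name, container name, and CPU request are hypothetical; the zone, capacity type, architecture, and taint key are taken from the example NodePool. The `nodeSelector` uses well-known labels, and the toleration covers the pool's regular taint (the startup taint does not need one).

```yaml
# Sketch only: metadata.name, the container, and the request size are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: example-workload
spec:
  # Each selector must fall inside the NodePool's requirements,
  # otherwise the NodePool cannot provision a node for this Pod.
  nodeSelector:
    karpenter.sh/capacity-type: on-demand
    topology.kubernetes.io/zone: us-west-2a
    kubernetes.io/arch: amd64
  # The NodePool taints its nodes with example.com/special-taint,
  # so the Pod has to tolerate that taint to use the pool.
  tolerations:
    - key: example.com/special-taint
      operator: Exists
      effect: NoSchedule
  containers:
    - name: app
      image: registry.k8s.io/pause:3.9
      resources:
        requests:
          cpu: "1"
```

If the Pod asked for a zone outside the pool's list, for example `us-west-2c`, the example NodePool would no longer be a candidate for it.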

NodeClaim

A NodeClaim is essentially derived from NodePool.spec.template. In addition, its requirements contain one more key than the NodePool's: node.kubernetes.io/instance-type.

    Name:         default-x9wxq
    Namespace:
    Labels:       karpenter.k8s.aws/instance-category=c
                  karpenter.k8s.aws/instance-cpu=8
                  karpenter.k8s.aws/instance-cpu-manufacturer=amd
                  karpenter.k8s.aws/instance-ebs-bandwidth=3170
                  karpenter.k8s.aws/instance-encryption-in-transit-supported=true
                  karpenter.k8s.aws/instance-family=c5a
                  karpenter.k8s.aws/instance-generation=5
                  karpenter.k8s.aws/instance-hypervisor=nitro
                  karpenter.k8s.aws/instance-memory=16384
                  karpenter.k8s.aws/instance-network-bandwidth=2500
                  karpenter.k8s.aws/instance-size=2xlarge
                  karpenter.sh/capacity-type=spot
                  karpenter.sh/nodepool=default
                  kubernetes.io/arch=amd64
                  kubernetes.io/os=linux
                  node.kubernetes.io/instance-type=c5a.2xlarge
                  topology.k8s.aws/zone-id=usw2-az3
                  topology.kubernetes.io/region=us-west-2
                  topology.kubernetes.io/zone=us-west-2c
    Annotations:  compatibility.karpenter.k8s.aws/cluster-name-tagged: true
                  compatibility.karpenter.k8s.aws/kubelet-drift-hash: 15379597991425564585
                  karpenter.k8s.aws/ec2nodeclass-hash: 5763643673275251833
                  karpenter.k8s.aws/ec2nodeclass-hash-version: v3
                  karpenter.k8s.aws/tagged: true
                  karpenter.sh/nodepool-hash: 377058807571762610
                  karpenter.sh/nodepool-hash-version: v3
    API Version:  karpenter.sh/v1
    Kind:         NodeClaim
    Metadata:
      Creation Timestamp:  2024-08-07T05:37:30Z
      Finalizers:
        karpenter.sh/termination
      Generate Name:  default-
      Generation:     1
      Owner References:
        API Version:           karpenter.sh/v1
        Block Owner Deletion:  true
        Kind:                  NodePool
        Name:                  default
        UID:                   6b9c6781-ac05-4a4c-ad6a-7551a07b2ce7
      Resource Version:        19600526
      UID:                     98a2ba32-232d-45c4-b7c0-b183cfb13d93
    Spec:
      Expire After:  720h0m0s
      Node Class Ref:
        Group:
        Kind:   EC2NodeClass
        Name:   default
      Requirements:
        Key:       kubernetes.io/arch
        Operator:  In
        Values:
          amd64
        Key:       kubernetes.io/os
        Operator:  In
        Values:
          linux
        Key:       karpenter.sh/capacity-type
        Operator:  In
        Values:
          spot
        Key:       karpenter.k8s.aws/instance-category
        Operator:  In
        Values:
          c
          m
          r
        Key:       karpenter.k8s.aws/instance-generation
        Operator:  Gt
        Values:
          2
        Key:       karpenter.sh/nodepool
        Operator:  In
        Values:
          default
        Key:       node.kubernetes.io/instance-type
        Operator:  In
        Values:
          c3.xlarge
          c4.xlarge
          c5.2xlarge
          c5.xlarge
          c5a.xlarge
          c5ad.2xlarge
          c5ad.xlarge
          c5d.2xlarge
      Resources:
        Requests:
          Cpu:   3150m
          Pods:  6
      Startup Taints:
        Effect:  NoSchedule
        Key:     app.dev/example-startup
      Taints:
        Effect:                  NoSchedule
        Key:                     app.dev/example
      Termination Grace Period:  1h0m0s
    Status:
      Allocatable:
        Cpu:                        7910m
        Ephemeral - Storage:        17Gi
        Memory:                     14162Mi
        Pods:                       58
        vpc.amazonaws.com/pod-eni:  38
      Capacity:
        Cpu:                        8
        Ephemeral - Storage:        20Gi
        Memory:                     15155Mi
        Pods:                       58
        vpc.amazonaws.com/pod-eni:  38
      Conditions:
        Last Transition Time:  2024-08-07T05:38:08Z
        Message:
        Reason:                Consolidatable
        Status:                True
        Type:                  Consolidatable
        Last Transition Time:  2024-08-07T05:38:07Z
        Message:
        Reason:                Initialized
        Status:                True
        Type:                  Initialized
        Last Transition Time:  2024-08-07T05:37:33Z
        Message:
        Reason:                Launched
        Status:                True
        Type:                  Launched
        Last Transition Time:  2024-08-07T05:38:07Z
        Message:
        Reason:                Ready
        Status:                True
        Type:                  Ready
        Last Transition Time:  2024-08-07T05:37:55Z
        Message:
        Reason:                Registered
        Status:                True
        Type:                  Registered
      Image ID:                ami-08946d4d49fc3f27b
      Node Name:               ip-xxx-xxx-xxx-xxx.us-west-2.compute.internal
      Provider ID:             aws:///us-west-2c/i-01234567890123
    Events:
      Type    Reason             Age   From       Message
      ----    ------             ----  ----       -------
      Normal  Launched           70s   karpenter  Status condition transitioned, Type: Launched, Status: Unknown -> True, Reason: Launched
      Normal  DisruptionBlocked  70s   karpenter  Cannot disrupt NodeClaim: state node doesn't contain both a node and a nodeclaim
      Normal  Registered         48s   karpenter  Status condition transitioned, Type: Registered, Status: Unknown -> True, Reason: Registered
      Normal  Initialized        36s   karpenter  Status condition transitioned, Type: Initialized, Status: Unknown -> True, Reason: Initialized
      Normal  Ready              36s   karpenter  Status condition transitioned, Type: Ready, Status: Unknown -> True, Reason: ReadyNodeClass

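A NodeClass holds settings that are specific to AWS (or to another cloud provider). Each NodePool must reference an EC2NodeClass (or another provider's NodeClass) through spec.template.spec.nodeClassRef. Multiple NodePools may point to the same EC2NodeClass.

Unlike a NodePool, a NodeClass typically configures things that are independent of the instance offering, such as the VPC, subnets, security groups, image, userdata, and other general options.

Because AWS resources support tagging, the EC2NodeClass can narrow down the candidate subnets, security groups, and similar resources by tag, name, or ID.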

    apiVersion: karpenter.sh/v1
    kind: NodePool
    metadata:
      name: default
    spec:
      template:
        spec:
          nodeClassRef:
            group: karpenter.k8s.aws
            kind: EC2NodeClass
            name: default
    ---
    apiVersion: karpenter.k8s.aws/v1
    kind: EC2NodeClass
    metadata:
      name: default
    spec:
      kubelet:
        podsPerCore: 2
        maxPods: 20
        systemReserved:
          cpu: 100m
          memory: 100Mi
          ephemeral-storage: 1Gi
        kubeReserved:
          cpu: 200m
          memory: 100Mi
          ephemeral-storage: 3Gi
        evictionHard:
          memory.available: 5%
          nodefs.available: 10%
          nodefs.inodesFree: 10%
        evictionSoft:
          memory.available: 500Mi
          nodefs.available: 15%
          nodefs.inodesFree: 15%
        evictionSoftGracePeriod:
          memory.available: 1m
          nodefs.available: 1m30s
          nodefs.inodesFree: 2m
        evictionMaxPodGracePeriod: 60
        imageGCHighThresholdPercent: 85
        imageGCLowThresholdPercent: 80
        cpuCFSQuota: true
        clusterDNS: ["10.0.1.100"]
      # Optional, dictates UserData generation and default block device mappings.
      # May be omitted when using an `alias` amiSelectorTerm, otherwise required.
      amiFamily: AL2
      # Required, discovers subnets to attach to instances
      # Each term in the array of subnetSelectorTerms is ORed together
      # Within a single term, all conditions are ANDed
      subnetSelectorTerms:
        # Select on any subnet that has the "karpenter.sh/discovery: ${CLUSTER_NAME}"
        # AND the "environment: test" tag OR any subnet with ID "subnet-09fa4a0a8f233a921"
        - tags:
            karpenter.sh/discovery: "${CLUSTER_NAME}"
            environment: test
        - id: subnet-09fa4a0a8f233a921
      # Required, discovers security groups to attach to instances
      # Each term in the array of securityGroupSelectorTerms is ORed together
      # Within a single term, all conditions are ANDed
      securityGroupSelectorTerms:
        # Select on any security group that has both the "karpenter.sh/discovery: ${CLUSTER_NAME}" tag
        # AND the "environment: test" tag OR any security group with the "my-security-group" name
        # OR any security group with ID "sg-063d7acfb4b06c82c"
        - tags:
            karpenter.sh/discovery: "${CLUSTER_NAME}"
            environment: test
        - name: my-security-group
        - id: sg-063d7acfb4b06c82c
      # Optional, IAM role to use for the node identity.
      # The "role" field is immutable after EC2NodeClass creation. This may change in the
      # future, but this restriction is currently in place today to ensure that Karpenter
      # avoids leaking managed instance profiles in your account.
      # Must specify one of "role" or "instanceProfile" for Karpenter to launch nodes
      role: "KarpenterNodeRole-${CLUSTER_NAME}"
      # Optional, IAM instance profile to use for the node identity.
      # Must specify one of "role" or "instanceProfile" for Karpenter to launch nodes
      instanceProfile: "KarpenterNodeInstanceProfile-${CLUSTER_NAME}"
      # Each term in the array of amiSelectorTerms is ORed together
      # Within a single term, all conditions are ANDed
      amiSelectorTerms:
        # Select on any AMI that has both the `karpenter.sh/discovery: ${CLUSTER_NAME}`
        # AND `environment: test` tags OR any AMI with the name `my-ami` OR an AMI with
        # ID `ami-123`
        - tags:
            karpenter.sh/discovery: "${CLUSTER_NAME}"
            environment: test
        - name: my-ami
        - id: ami-123
        # Select EKS optimized AL2023 AMIs with version `v20240703`. This term is mutually
        # exclusive and can't be specified with other terms.
        # - alias: al2023@v20240703
      # Optional, propagates tags to underlying EC2 resources
      tags:
        team: team-a
        app: team-a-app
      # Optional, configures IMDS for the instance
      metadataOptions:
        httpEndpoint: enabled
        httpProtocolIPv6: disabled
        httpPutResponseHopLimit: 1 # This is changed to disable IMDS access from containers not on the host network
        httpTokens: required
      # Optional, configures storage devices for the instance
      blockDeviceMappings:
        - deviceName: /dev/xvda
          ebs:
            volumeSize: 100Gi
            volumeType: gp3
            iops: 10000
            encrypted: true
            kmsKeyID: "1234abcd-12ab-34cd-56ef-1234567890ab"
            deleteOnTermination: true
            throughput: 125
            snapshotID: snap-0123456789
      # Optional, use instance-store volumes for node ephemeral-storage
      instanceStorePolicy: RAID0
      # Optional, overrides autogenerated userdata with a merge semantic
      userData: |
        echo "Hello world"
      # Optional, configures detailed monitoring for the instance
      detailedMonitoring: true
      # Optional, configures if the instance should be launched with an associated public IP address.
      # If not specified, the default value depends on the subnet's public IP auto-assign setting.
      associatePublicIPAddress: true
    status:
      # Resolved subnets
      subnets:
        - id: subnet-0a462d98193ff9fac
          zone: us-east-2b
        - id: subnet-0322dfafd76a609b6
          zone: us-east-2c
        - id: subnet-0727ef01daf4ac9fe
          zone: us-east-2b
        - id: subnet-00c99aeafe2a70304
          zone: us-east-2a
        - id: subnet-023b232fd5eb0028e
          zone: us-east-2c
        - id: subnet-03941e7ad6afeaa72
          zone: us-east-2a
      # Resolved security groups
      securityGroups:
        - id: sg-041513b454818610b
          name: ClusterSharedNodeSecurityGroup
        - id: sg-0286715698b894bca
          name: ControlPlaneSecurityGroup-1AQ073TSAAPW
      # Resolved AMIs
      amis:
        - id: ami-01234567890123456
          name: custom-ami-amd64
          requirements:
            - key: kubernetes.io/arch
              operator: In
              values:
                - amd64
        - id: ami-01234567890123456
          name: custom-ami-arm64
          requirements:
            - key: kubernetes.io/arch
              operator: In
              values:
                - arm64
      # Generated instance profile name from "role"
      instanceProfile: "${CLUSTER_NAME}-0123456778901234567789"
      conditions:
        - lastTransitionTime: "2024-02-02T19:54:34Z"
          status: "True"
          type: InstanceProfileReady
        - lastTransitionTime: "2024-02-02T19:54:34Z"
          status: "True"
          type: SubnetsReady
        - lastTransitionTime: "2024-02-02T19:54:34Z"
          status: "True"
          type: SecurityGroupsReady
        - lastTransitionTime: "2024-02-02T19:54:34Z"
          status: "True"
          type: AMIsReady
        - lastTransitionTime: "2024-02-02T19:54:34Z"
          status: "True"
          type: Ready
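The full example above lists every option, and most of them are optional. As a rough illustration of how little is needed, the following sketch keeps only the role, an `alias` AMI term (which, per the comment in the full example, allows amiFamily to be omitted), and tag-based subnet and security group selection. The name `minimal` is hypothetical, and `${CLUSTER_NAME}` is a placeholder that has to be substituted before the manifest is applied.

```yaml
# Sketch only: metadata.name is hypothetical; ${CLUSTER_NAME} must be substituted.
apiVersion: karpenter.k8s.aws/v1
kind: EC2NodeClass
metadata:
  name: minimal
spec:
  # IAM role for the node identity (one of "role" or "instanceProfile")
  role: "KarpenterNodeRole-${CLUSTER_NAME}"
  # An alias term cannot be combined with other AMI selector terms
  amiSelectorTerms:
    - alias: al2023@v20240703
  # Subnets and security groups are discovered by tag
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}"
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}"
```

A NodePool would reference this class by setting `name: minimal` under spec.template.spec.nodeClassRef.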