1. Istio proxy implements Envoy's stats-collection plugin in proxy/extensions/stats/plugin.cc. A typical metric it produces looks like this:

```
istio_requests_total{
  connection_security_policy="mutual_tls",
  destination_app="details",
  destination_canonical_service="details",
  destination_canonical_revision="v1",
  destination_principal="cluster.local/ns/default/sa/default",
  destination_service="details.default.svc.cluster.local",  // identifies the destination
  destination_service_name="details",                       // identifies the destination
  destination_service_namespace="default",                  // identifies the destination
  destination_version="v1",
  destination_workload="details-v1",
  destination_workload_namespace="default",
  reporter="destination",
  request_protocol="http",
  response_code="200",
  response_flags="-",
  source_app="productpage",                                 // identifies the source
  source_canonical_service="productpage",
  source_canonical_revision="v1",
  source_principal="cluster.local/ns/default/sa/default",
  source_version="v1",
  source_workload="productpage-v1",                         // identifies the source
  source_workload_namespace="default"                       // identifies the source
}
```

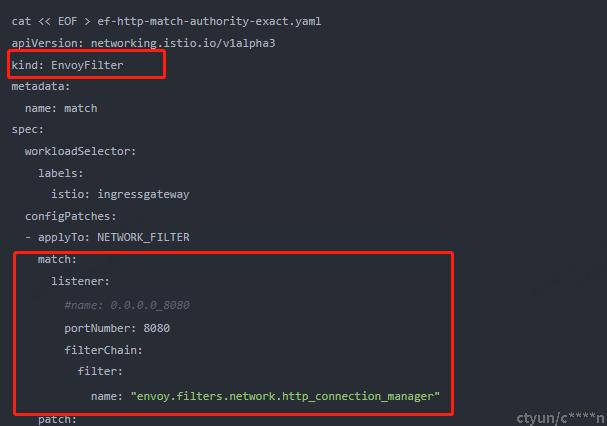

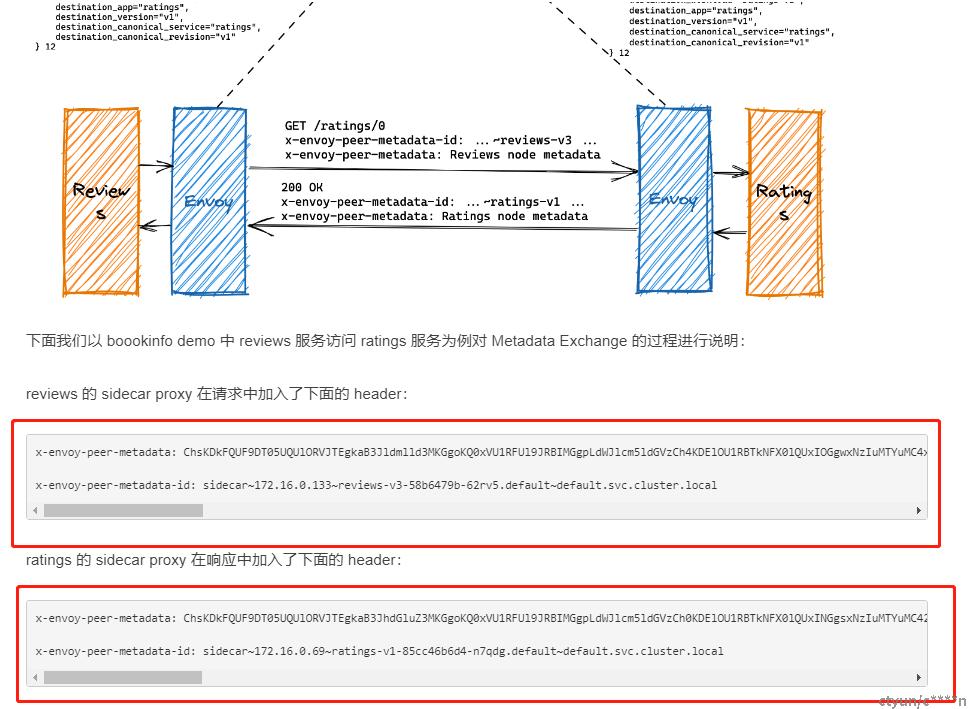

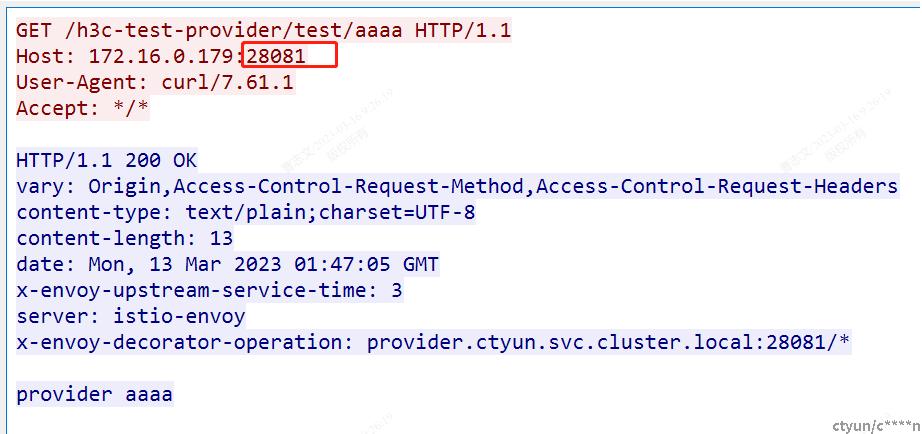

Envoy adds extra data to the HTTP request headers, and the proxy on the other side parses it to obtain the destination-related fields:

(Reference: https://www.zhaohuabing.com/post/2023-02-14-istio-metrics-deep-dive/)
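The exchange itself can be pictured with a minimal sketch. It assumes request headers are modeled as a plain `std::map` and that the metadata is an opaque string (the real plugin carries an encoded node-info structure). The names `Headers`, `PeerMetadata`, `attachPeerMetadata` and `takePeerMetadata` are hypothetical; only the two header names come from the Istio proxy source quoted below.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <string>

// Hypothetical model: request headers as a simple name -> value map.
using Headers = std::map<std::string, std::string>;

// Header names as defined in the Istio proxy source.
const std::string kMetadataHeader = "x-envoy-peer-metadata";
const std::string kMetadataIdHeader = "x-envoy-peer-metadata-id";

// Hypothetical, simplified metadata: the real value is an encoded structure.
struct PeerMetadata {
  std::string id;     // node id of the sending proxy
  std::string value;  // opaque workload metadata
};

// Sending side: attach the local proxy's metadata to the outgoing request.
void attachPeerMetadata(Headers& headers, const PeerMetadata& local) {
  if (!local.value.empty()) headers[kMetadataHeader] = local.value;
  if (!local.id.empty()) headers[kMetadataIdHeader] = local.id;
}

// Receiving side: read and strip both headers so the application never sees
// them. Returns nullopt when no usable metadata arrived.
std::optional<PeerMetadata> takePeerMetadata(Headers& headers) {
  PeerMetadata peer;
  if (auto it = headers.find(kMetadataIdHeader); it != headers.end()) {
    peer.id = it->second;
    headers.erase(it);
  }
  if (auto it = headers.find(kMetadataHeader); it != headers.end()) {
    peer.value = it->second;
    headers.erase(it);
  }
  if (peer.value.empty()) return std::nullopt;
  return peer;
}
```

If the sending side never runs `attachPeerMetadata` (for example because the request is not handled as HTTP), the receiving side gets `nullopt` and has nothing to fill the peer labels with.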

2. The metadata is parsed in Istio proxy as follows (code from istio proxy release 1.14):

```cpp
constexpr std::string_view ExchangeMetadataHeader = "x-envoy-peer-metadata";       // header name
constexpr std::string_view ExchangeMetadataHeaderId = "x-envoy-peer-metadata-id";  // header name

FilterHeadersStatus PluginContext::onRequestHeaders(uint32_t, bool) {
  // strip and store downstream peer metadata
  auto downstream_metadata_id = getRequestHeader(ExchangeMetadataHeaderId);  // read the metadata id
  if (downstream_metadata_id != nullptr &&
      !downstream_metadata_id->view().empty()) {
    removeRequestHeader(ExchangeMetadataHeaderId);
    setFilterState(::Wasm::Common::kDownstreamMetadataIdKey,  // store it
                   downstream_metadata_id->view());
  } else {
    metadata_id_received_ = false;
  }

  auto downstream_metadata_value = getRequestHeader(ExchangeMetadataHeader);  // read the metadata
  if (downstream_metadata_value != nullptr &&
      !downstream_metadata_value->view().empty()) {
    removeRequestHeader(ExchangeMetadataHeader);
    if (!rootContext()->updatePeer(::Wasm::Common::kDownstreamMetadataKey,  // store it
                                   downstream_metadata_id->view(),
                                   downstream_metadata_value->view())) {
      LOG_DEBUG("cannot set downstream peer node");
    }
  } else {
    metadata_received_ = false;
  }

  // do not send request internal headers to sidecar app if it is an inbound
  // proxy
  if (direction_ != ::Wasm::Common::TrafficDirection::Inbound) {
    auto metadata = metadataValue();
    // insert peer metadata struct for upstream
    if (!metadata.empty()) {
      replaceRequestHeader(ExchangeMetadataHeader, metadata);
    }
    auto nodeid = nodeId();
    if (!nodeid.empty()) {
      replaceRequestHeader(ExchangeMetadataHeaderId, nodeid);
    }
  }
  return FilterHeadersStatus::Continue;
}

// Stats plugin root context: selects the filter-state keys that hold the peer info
PluginRootContext(uint32_t id, std::string_view root_id, bool is_outbound)
    : RootContext(id, root_id), outbound_(is_outbound) {
  Metric cache_count(MetricType::Counter, "metric_cache_count",
                     {MetricTag{"wasm_filter", MetricTag::TagType::String},
                      MetricTag{"cache", MetricTag::TagType::String}});
  cache_hits_ = cache_count.resolve("stats_filter", "hit");
  cache_misses_ = cache_count.resolve("stats_filter", "miss");
  empty_node_info_ = ::Wasm::Common::extractEmptyNodeFlatBuffer();
  if (outbound_) {
    peer_metadata_id_key_ = ::Wasm::Common::kUpstreamMetadataIdKey;    // keys read before parsing
    peer_metadata_key_ = ::Wasm::Common::kUpstreamMetadataKey;         // keys read before parsing
  } else {
    peer_metadata_id_key_ = ::Wasm::Common::kDownstreamMetadataIdKey;  // keys read before parsing
    peer_metadata_key_ = ::Wasm::Common::kDownstreamMetadataKey;       // keys read before parsing
  }
}

// When the metric is recorded:
Wasm::Common::PeerNodeInfo peer_node_info(peer_metadata_id_key_,
                                          peer_metadata_key_);  // wraps the parser
// map the peer and request info into the metric dimensions istio_dimensions_
map(istio_dimensions_, outbound_, peer_node_info.get(), request_info);
```
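What this means for the labels can be sketched in a simplified form: when no peer metadata was exchanged, the peer-side labels cannot be filled in and show up as "unknown", which is the symptom investigated below. The `PeerInfo` struct, the `Dimensions` map and `mapPeerDimensions` are hypothetical stand-ins; the real `map()` fills many more labels from the decoded node info.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <string>

// Hypothetical, simplified peer info decoded from x-envoy-peer-metadata.
struct PeerInfo {
  std::string workload;
  std::string workload_namespace;
  std::string app;
};

using Dimensions = std::map<std::string, std::string>;

// `outbound` means this proxy is the client (reporter="source"), so the peer
// is the destination; otherwise the peer is the source.
Dimensions mapPeerDimensions(bool outbound, const std::optional<PeerInfo>& peer) {
  const std::string prefix = outbound ? "destination_" : "source_";
  const std::string unknown = "unknown";
  Dimensions dims;
  dims["reporter"] = outbound ? "source" : "destination";
  // Without exchanged metadata every peer-side label falls back to "unknown".
  dims[prefix + "workload"] = peer ? peer->workload : unknown;
  dims[prefix + "workload_namespace"] = peer ? peer->workload_namespace : unknown;
  dims[prefix + "app"] = peer ? peer->app : unknown;
  return dims;
}
```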

Troubleshooting:

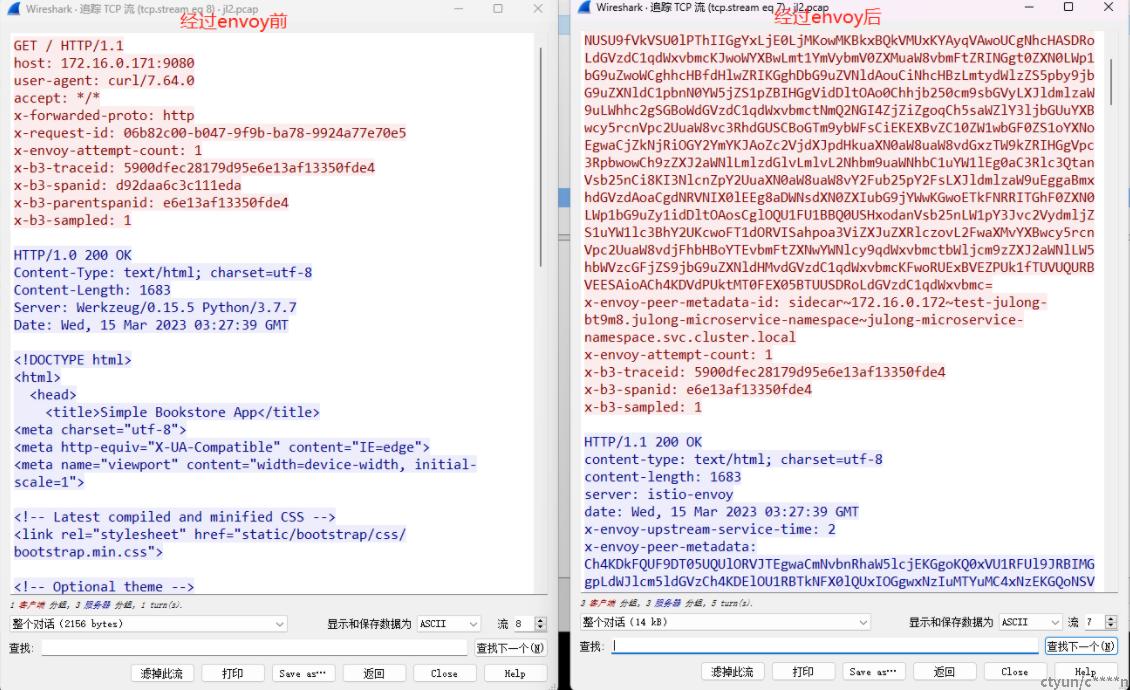

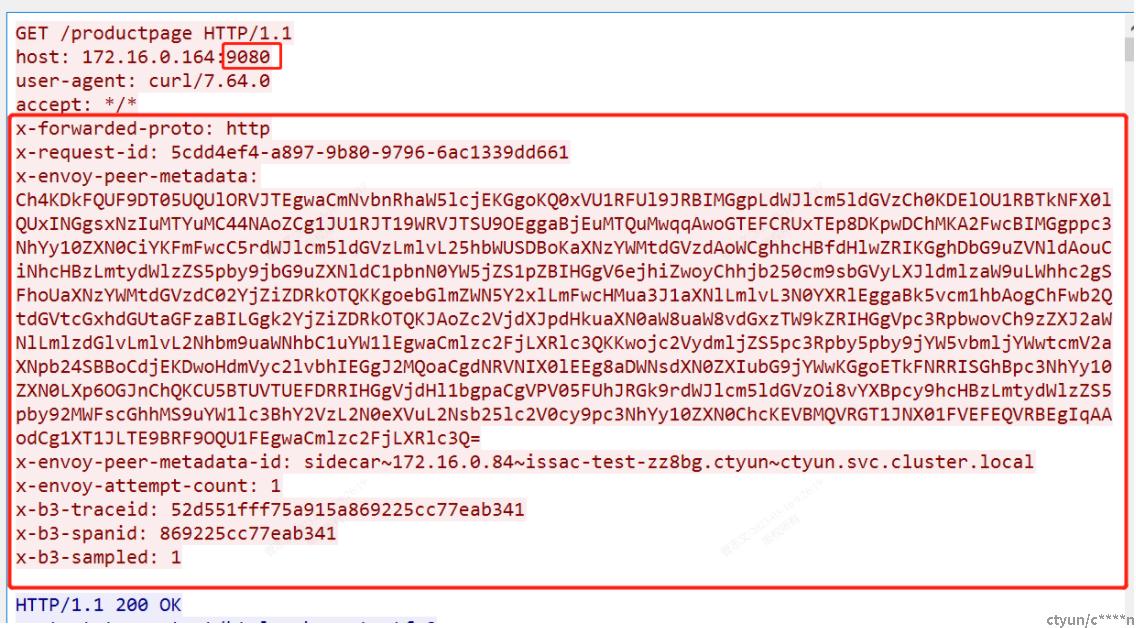

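1. The first suspicion was that the requests reported as unknown lacked the x-envoy-peer-metadata header.

Capturing and comparing packets on the client and server sides confirmed it: the requests reported as unknown did not carry x-envoy-peer-metadata.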
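When comparing many captured requests, a small helper that checks a raw HTTP/1.x request dump for the header can save some eyeballing. This is a hypothetical sketch (the function names are illustrative) that assumes the request text is available in plaintext; header names are compared case-insensitively, as HTTP requires.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <sstream>
#include <string>

// Lowercase a copy of the string; HTTP header names are case-insensitive.
std::string toLower(std::string s) {
  std::transform(s.begin(), s.end(), s.begin(),
                 [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
  return s;
}

// Returns true if the raw HTTP/1.x request contains the named header.
// Only the header section (up to the first empty line) is scanned.
bool hasHeader(const std::string& raw_request, const std::string& name) {
  std::istringstream in(raw_request);
  std::string line;
  std::getline(in, line);  // skip the request line, e.g. "GET / HTTP/1.1"
  const std::string wanted = toLower(name);
  while (std::getline(in, line)) {
    if (!line.empty() && line.back() == '\r') line.pop_back();
    if (line.empty()) break;  // end of the header section
    auto colon = line.find(':');
    if (colon != std::string::npos && toLower(line.substr(0, colon)) == wanted) {
      return true;
    }
  }
  return false;
}
```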

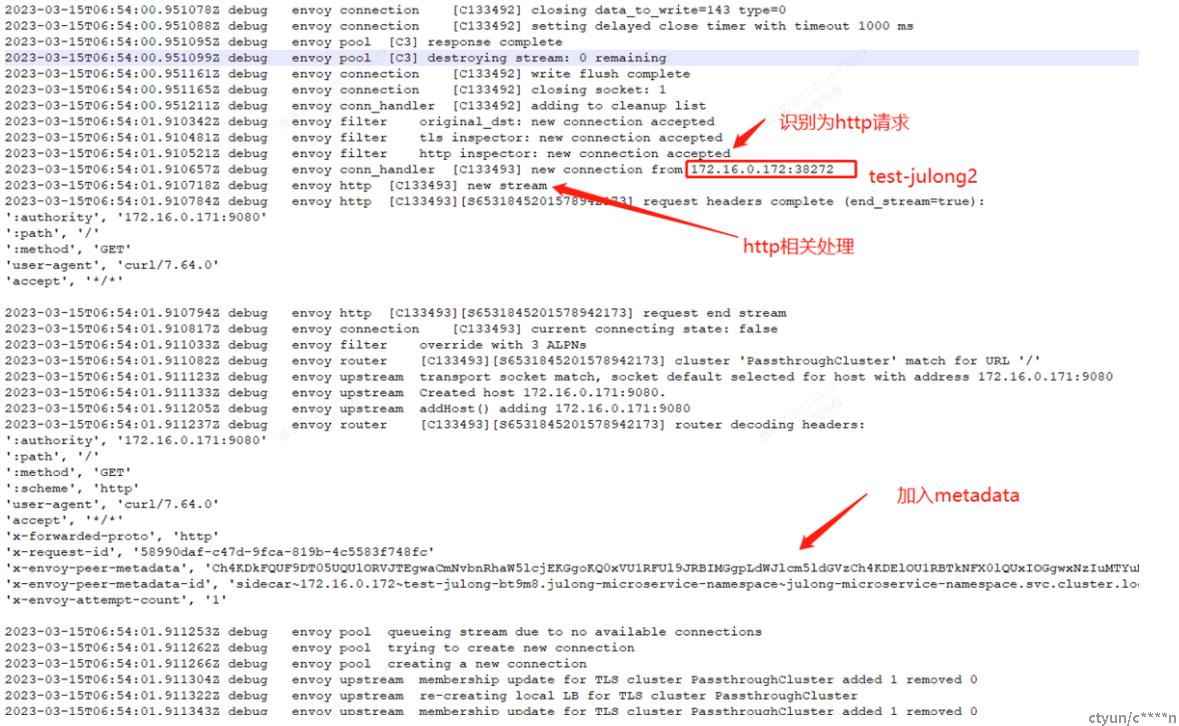

2. Enable debug logging on the client's istio-proxy and watch the logs (more efficient than capturing packets):

```shell
istioctl proxy-config log -n julong-microservice-namespace test-julong-bt9m8 --level debug
kubectl logs -n julong-microservice-namespace test-julong-bt9m8 -c istio-proxy -f
```

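The log flow differs depending on whether x-envoy-peer-metadata is injected: when it is, the traffic enters the HTTP filter chain; when it is not, Envoy treats the connection only as a TCP proxy session.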

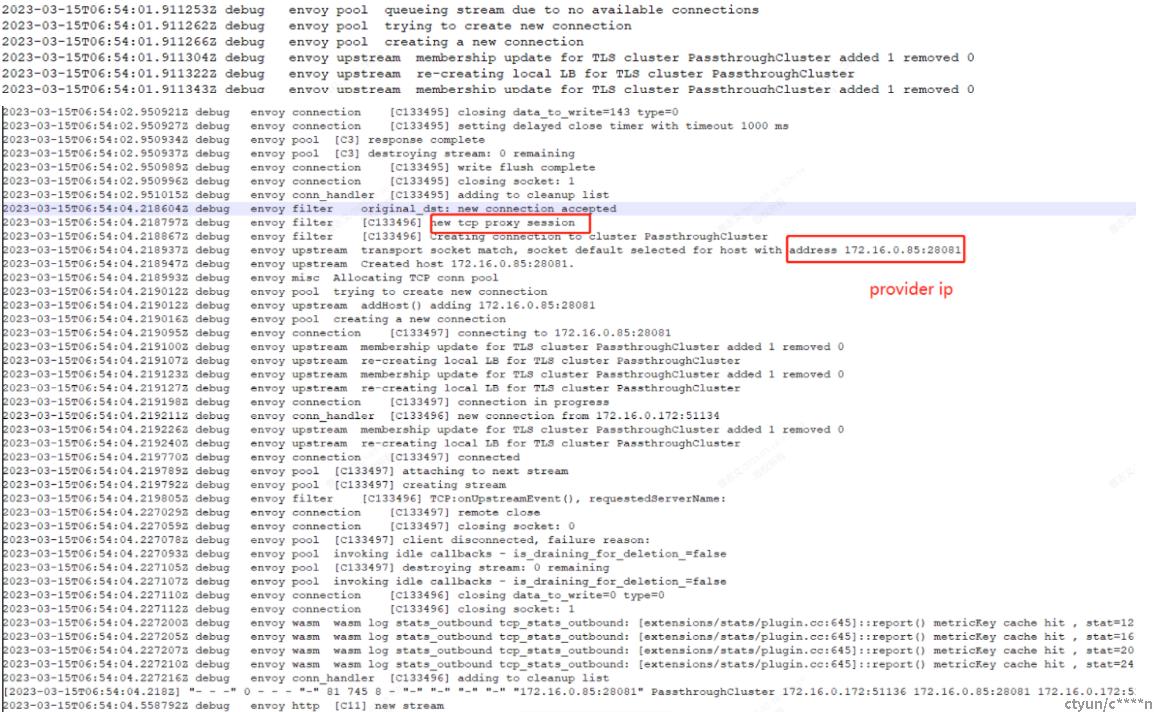

3、为了排查istio内部机制的影响,实验将server部署在k8s机器外的服务器,从集群内pod去访问,观察注入情况

发现访问机器外的服务也有注入

4、排查istio机制的影响后;怀疑点就集中在服务,流量,端口,url等因素。

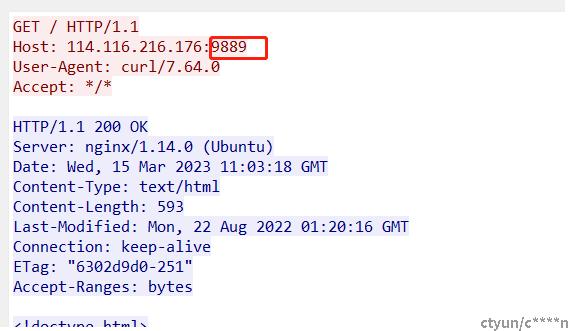

切换服务监听端口发现问题;同为nginx服务,当服务端口是80或9080时有注入;当服务端口非以上端口istio不识别不注入
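The observation can be restated as a tiny model. This is a hypothesis that matches the symptoms, not a description of Istio's implementation: the proxy handles traffic on a given set of ports with the HTTP filter chain (80 and 9080 in this environment, an assumption that will differ per mesh) and everything else as plain TCP, and only the HTTP chain adds the metadata header. `FilterChain`, `selectFilterChain` and `injectsPeerMetadata` are hypothetical names.

```cpp
#include <cassert>
#include <set>

// Hypothetical model of the observed behavior, not Istio's actual code.
enum class FilterChain { Http, TcpProxy };

// Ports observed to be handled as HTTP (assumed; 80 and 9080 here).
FilterChain selectFilterChain(int port, const std::set<int>& http_ports) {
  return http_ports.count(port) ? FilterChain::Http : FilterChain::TcpProxy;
}

// Only the HTTP chain runs the metadata-exchange logic that adds the header;
// a TCP proxy session forwards bytes without touching HTTP headers.
bool injectsPeerMetadata(int port, const std::set<int>& http_ports) {
  return selectFilterChain(port, http_ports) == FilterChain::Http;
}
```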

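5. Why Istio appears to rely only on the port to identify the protocol remains to be verified.

One article suggests this can be configured through an EnvoyFilter; that also needs further verification: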

https://www.modb.pro/db/128866