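This document uses the tcplife tool (Linux eBPF/BCC) as a starting point to explain how tcplife is implemented and, through it, how eBPF/BCC programs are written.

I. Examples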

# ./tcplife

PID COMM LADDR LPORT RADDR RPORT TX_KB RX_KB MS

22597 recordProg 127.0.0.1 46644 127.0.0.1 28527 0 0 0.23

3277 redis-serv 127.0.0.1 28527 127.0.0.1 46644 0 0 0.28

22598 curl 100.66.3.172 61620 52.205.89.26 80 0 1 91.79

22604 curl 100.66.3.172 44400 52.204.43.121 80 0 1 121.38

22624 recordProg 127.0.0.1 46648 127.0.0.1 28527 0 0 0.22

3277 redis-serv 127.0.0.1 28527 127.0.0.1 46648 0 0 0.27

22647 recordProg 127.0.0.1 46650 127.0.0.1 28527 0 0 0.21

3277 redis-serv 127.0.0.1 28527 127.0.0.1 46650 0 0 0.26

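[...]

tcplife summarizes the TCP sessions that were opened and closed while tracing, as shown above. It captured a program named "recordProg" making a series of short-lived TCP connections to "redis-serv", each lasting about 0.25 ms. Several curl sessions were traced as well, connecting to port 80 and lasting 91 and 121 ms.

The tool is useful for workload characterization and flow accounting: it identifies which connections are occurring and how many bytes they transfer.

In the next example, a 10 Mbyte file was uploaded to the server and then downloaded again with scp: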
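As a rough illustration of that kind of accounting, the sketch below parses a few of the default-format output lines shown above and groups them by command name. The helper name `summarize` and the embedded sample text are made up for this example; tcplife itself does no such aggregation, and the whitespace split assumes a COMM value without spaces.

```python
from collections import defaultdict

# A few rows copied from the sample output above (default column layout).
SAMPLE = """\
PID COMM LADDR LPORT RADDR RPORT TX_KB RX_KB MS
22597 recordProg 127.0.0.1 46644 127.0.0.1 28527 0 0 0.23
3277 redis-serv 127.0.0.1 28527 127.0.0.1 46644 0 0 0.28
22598 curl 100.66.3.172 61620 52.205.89.26 80 0 1 91.79
22604 curl 100.66.3.172 44400 52.204.43.121 80 0 1 121.38
"""


def summarize(text):
    """Return {comm: (session_count, total_rx_kb, mean_duration_ms)}."""
    acc = defaultdict(lambda: [0, 0, 0.0])
    for line in text.splitlines():
        fields = line.split()
        # Skip the header and anything that is not a 9-column data row.
        if len(fields) != 9 or fields[0] == "PID":
            continue
        comm, rx_kb, ms = fields[1], int(fields[7]), float(fields[8])
        entry = acc[comm]
        entry[0] += 1
        entry[1] += rx_kb
        entry[2] += ms
    return {c: (n, rx, total / n) for c, (n, rx, total) in acc.items()}


summary = summarize(SAMPLE)
for comm, (count, rx_kb, mean_ms) in sorted(summary.items()):
    print(f"{comm:<12} sessions={count} rx_kb={rx_kb} mean_ms={mean_ms:.2f}")
```

For anything beyond a quick look, the -s (CSV) option listed in the usage message is probably a safer input format than whitespace-separated columns.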

# ./tcplife

PID COMM LADDR LPORT RADDR RPORT TX_KB RX_KB MS

7715 recordProg 127.0.0.1 50894 127.0.0.1 28527 0 0 0.25

3277 redis-serv 127.0.0.1 28527 127.0.0.1 50894 0 0 0.30

7619 sshd 100.66.3.172 22 100.127.64.230 63033 5 10255 3066.79

7770 recordProg 127.0.0.1 50896 127.0.0.1 28527 0 0 0.20

3277 redis-serv 127.0.0.1 28527 127.0.0.1 50896 0 0 0.24

7793 recordProg 127.0.0.1 50898 127.0.0.1 28527 0 0 0.23

3277 redis-serv 127.0.0.1 28527 127.0.0.1 50898 0 0 0.27

7847 recordProg 127.0.0.1 50900 127.0.0.1 28527 0 0 0.24

3277 redis-serv 127.0.0.1 28527 127.0.0.1 50900 0 0 0.29

7870 recordProg 127.0.0.1 50902 127.0.0.1 28527 0 0 0.29

3277 redis-serv 127.0.0.1 28527 127.0.0.1 50902 0 0 0.30

7798 sshd 100.66.3.172 22 100.127.64.230 64925 10265 6 2176.15

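[...]

sshd received about 10 Mbytes and then sent the same amount back out. Judging by the session durations, receiving (3.07 seconds) was slower than transmitting (2.18 seconds).

tcplife also accepts several options, such as wide output, port filtering, and IP version (IPv4/IPv6) filtering, as its USAGE message shows: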
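The comparison can be made concrete with a little arithmetic on the two sshd rows. The helper below is hypothetical. Because the MS column is the lifetime of the whole session (handshake, authentication, and teardown included), the figures are only lower bounds on the real transfer rate.

```python
def throughput_mb_per_s(kbytes, duration_ms):
    """Average rate over the whole session, in Mbytes per second."""
    return (kbytes / 1024) / (duration_ms / 1000)


# Values taken from the two sshd rows above.
upload = throughput_mb_per_s(10255, 3066.79)    # RX_KB of the first session
download = throughput_mb_per_s(10265, 2176.15)  # TX_KB of the second session

print(f"upload   (sshd receiving):    {upload:.2f} MB/s")
print(f"download (sshd transmitting): {download:.2f} MB/s")
```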

# ./tcplife.py -h

usage: tcplife.py [-h] [-T] [-t] [-w] [-s] [-p PID] [-L LOCALPORT]

[-D REMOTEPORT] [-4 | -6]

Trace the lifespan of TCP sessions and summarize

optional arguments:

-h, --help show this help message and exit

-T, --time include time column on output (HH:MM:SS)

-t, --timestamp include timestamp on output (seconds)

-w, --wide wide column output (fits IPv6 addresses)

-s, --csv comma separated values output

-p PID, --pid PID trace this PID only

-L LOCALPORT, --localport LOCALPORT

comma-separated list of local ports to trace.

-D REMOTEPORT, --remoteport REMOTEPORT

comma-separated list of remote ports to trace.

-4, --ipv4 trace IPv4 family only

-6, --ipv6 trace IPv6 family only

examples:

./tcplife # trace all TCP connect()s

./tcplife -t # include time column (HH:MM:SS)

./tcplife -w # wider columns (fit IPv6)

./tcplife -stT # csv output, with times & timestamps

./tcplife -p 181 # only trace PID 181

./tcplife -L 80 # only trace local port 80

./tcplife -L 80,81 # only trace local ports 80 and 81

./tcplife -D 80 # only trace remote port 80

./tcplife -4 # only trace IPv4 family

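./tcplife -6 # only trace IPv6 family

II. Implementation

BCC makes eBPF programs easier to write: the kernel-side core is written in C, while the user-space front end is written in Python or Lua. tcplife itself is a Python file whose core is eBPF logic written in C and embedded as a string.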

bpf_text = """

#include <uapi/linux/ptrace.h>

#include <linux/tcp.h>

#include <net/sock.h>

#include <bcc/proto.h>

BPF_HASH(birth, struct sock *, u64);

// separate data structs for ipv4 and ipv6

struct ipv4_data_t {

u64 ts_us;

u32 pid;

u32 saddr;

u32 daddr;

u64 ports;

u64 rx_b;

u64 tx_b;

u64 span_us;

char task[TASK_COMM_LEN];

};

BPF_PERF_OUTPUT(ipv4_events);

struct ipv6_data_t {

u64 ts_us;

u32 pid;

unsigned __int128 saddr;

unsigned __int128 daddr;

u64 ports;

u64 rx_b;

u64 tx_b;

u64 span_us;

char task[TASK_COMM_LEN];

};

BPF_PERF_OUTPUT(ipv6_events);

struct id_t {

u32 pid;

char task[TASK_COMM_LEN];

};

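BPF_HASH(whoami, struct sock *, struct id_t);

"""

Where:

bpf_text = """...""": defines an inline eBPF program written in C. It is loaded as a string and contains the core accounting logic.

BPF_HASH(birth, struct sock *, u64): creates a BPF hash map named birth, with struct sock * as the key type and u64 as the value type. It stores the birth timestamp of each sock.

struct ipv4_data_t: a user-defined C struct used to pass data from kernel space to user space.

BPF_PERF_OUTPUT(ipv4_events): creates an output channel named ipv4_events, to which the kernel side submits its events. On the Python side it is a key of the BPF object and can be retrieved as bpf_object["ipv4_events"].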
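To make the kernel-to-user hand-off more tangible, here is a minimal sketch that mirrors struct ipv4_data_t with ctypes and decodes it from a raw byte buffer, which is roughly what a perf event payload looks like to user space. The class name `Ipv4Data` and the sample values are invented for illustration. BCC builds an equivalent class automatically, so tcplife never declares one by hand.

```python
import ctypes

TASK_COMM_LEN = 16  # value of the kernel constant of the same name


class Ipv4Data(ctypes.Structure):
    """User-space mirror of struct ipv4_data_t (same field order and types)."""
    _fields_ = [
        ("ts_us", ctypes.c_uint64),
        ("pid", ctypes.c_uint32),
        ("saddr", ctypes.c_uint32),
        ("daddr", ctypes.c_uint32),
        ("ports", ctypes.c_uint64),
        ("rx_b", ctypes.c_uint64),
        ("tx_b", ctypes.c_uint64),
        ("span_us", ctypes.c_uint64),
        ("task", ctypes.c_char * TASK_COMM_LEN),
    ]


# Pretend the kernel side filled in a record and submitted it as raw bytes.
sent = Ipv4Data(ts_us=1, pid=22598, ports=80 + (61620 << 32),
                rx_b=1024, span_us=91790, task=b"curl")
raw = bytes(sent)

# The user-space side reinterprets the bytes using the same layout.
received = Ipv4Data.from_buffer_copy(raw)
print(received.pid, received.task.decode(), received.span_us / 1000, "ms")
```

The important point is that both sides agree on one struct layout; the channel itself only carries bytes.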

    if (BPF.tracepoint_exists("sock", "inet_sock_set_state")):
        bpf_text += bpf_text_tracepoint
    else:
        bpf_text += bpf_text_kprobe

This shows that the tracing part of tcplife has two implementations: on Linux kernel 4.16 and later it uses TRACEPOINT_PROBE, and on older kernels it falls back to a kprobe on tcp_set_state(). This document analyzes the TRACEPOINT_PROBE implementation.
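The selection logic can be sketched without BCC by checking whether the tracepoint's directory exists in tracefs. This is not how BPF.tracepoint_exists() is implemented; `tracepoint_exists` and `choose_probe` below are hypothetical stand-ins, and the two mount points are the usual tracefs locations, given here as assumptions.

```python
import os

# Common tracefs mount points (assumed; reading them usually requires root).
TRACEFS_ROOTS = ("/sys/kernel/tracing", "/sys/kernel/debug/tracing")


def tracepoint_exists(category, event, roots=TRACEFS_ROOTS):
    """Return True if events/<category>/<event> exists under any tracefs root."""
    return any(
        os.path.isdir(os.path.join(root, "events", category, event))
        for root in roots
    )


def choose_probe(has_tracepoint):
    """Prefer the stable tracepoint; fall back to a kprobe otherwise."""
    return "tracepoint" if has_tracepoint else "kprobe"


print(choose_probe(tracepoint_exists("sock", "inet_sock_set_state")))
```

Tracepoints are preferred when available because they are a stable kernel interface, whereas a kprobe depends on an internal function that may be renamed or inlined.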

bpf_text_tracepoint = """

TRACEPOINT_PROBE(sock, inet_sock_set_state)

{

if (args->protocol != IPPROTO_TCP)

return 0;

u32 pid = bpf_get_current_pid_tgid() >> 32;

// sk is mostly used as a UUID, and for two tcp stats:

struct sock *sk = (struct sock *)args->skaddr;

// lport is either used in a filter here, or later

u16 lport = args->sport;

FILTER_LPORT

// dport is either used in a filter here, or later

u16 dport = args->dport;

FILTER_DPORT

/*

* This tool includes PID and comm context. It's best effort, and may

* be wrong in some situations. It currently works like this:

* - record timestamp on any state < TCP_FIN_WAIT1

* - cache task context on:

* TCP_SYN_SENT: tracing from client

* TCP_LAST_ACK: client-closed from server

* - do output on TCP_CLOSE:

* fetch task context if cached, or use current task

*/

// capture birth time

if (args->newstate < TCP_FIN_WAIT1) {

/*

* Matching just ESTABLISHED may be sufficient, provided no code-path

* sets ESTABLISHED without a tcp_set_state() call. Until we know

* that for sure, match all early states to increase chances a

* timestamp is set.

* Note that this needs to be set before the PID filter later on,

* since the PID isn't reliable for these early stages, so we must

* save all timestamps and do the PID filter later when we can.

*/

u64 ts = bpf_ktime_get_ns();

birth.update(&sk, &ts);

}

// record PID & comm on SYN_SENT

if (args->newstate == TCP_SYN_SENT || args->newstate == TCP_LAST_ACK) {

// now we can PID filter, both here and a little later on for CLOSE

FILTER_PID

struct id_t me = {.pid = pid};

bpf_get_current_comm(&me.task, sizeof(me.task));

whoami.update(&sk, &me);

}

if (args->newstate != TCP_CLOSE)

return 0;

// calculate lifespan

u64 *tsp, delta_us;

tsp = birth.lookup(&sk);

if (tsp == 0) {

whoami.delete(&sk); // may not exist

return 0; // missed create

}

delta_us = (bpf_ktime_get_ns() - *tsp) / 1000;

birth.delete(&sk);

// fetch possible cached data, and filter

struct id_t *mep;

mep = whoami.lookup(&sk);

if (mep != 0)

pid = mep->pid;

FILTER_PID

u16 family = args->family;

FILTER_FAMILY

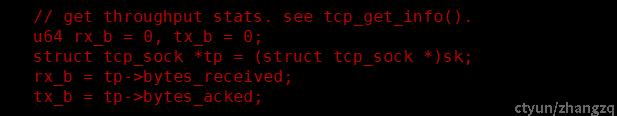

// get throughput stats. see tcp_get_info().

u64 rx_b = 0, tx_b = 0;

struct tcp_sock *tp = (struct tcp_sock *)sk;

rx_b = tp->bytes_received;

tx_b = tp->bytes_acked;

if (args->family == AF_INET) {

struct ipv4_data_t data4 = {};

data4.span_us = delta_us;

data4.rx_b = rx_b;

data4.tx_b = tx_b;

data4.ts_us = bpf_ktime_get_ns() / 1000;

__builtin_memcpy(&data4.saddr, args->saddr, sizeof(data4.saddr));

__builtin_memcpy(&data4.daddr, args->daddr, sizeof(data4.daddr));

// a workaround until data4 compiles with separate lport/dport

data4.ports = dport + ((0ULL + lport) << 32);

data4.pid = pid;

if (mep == 0) {

bpf_get_current_comm(&data4.task, sizeof(data4.task));

} else {

bpf_probe_read_kernel(&data4.task, sizeof(data4.task), (void *)mep->task);

}

ipv4_events.perf_submit(args, &data4, sizeof(data4));

} else /* 6 */ {

struct ipv6_data_t data6 = {};

data6.span_us = delta_us;

data6.rx_b = rx_b;

data6.tx_b = tx_b;

data6.ts_us = bpf_ktime_get_ns() / 1000;

__builtin_memcpy(&data6.saddr, args->saddr_v6, sizeof(data6.saddr));

__builtin_memcpy(&data6.daddr, args->daddr_v6, sizeof(data6.daddr));

// a workaround until data6 compiles with separate lport/dport

data6.ports = dport + ((0ULL + lport) << 32);

data6.pid = pid;

if (mep == 0) {

bpf_get_current_comm(&data6.task, sizeof(data6.task));

} else {

bpf_probe_read_kernel(&data6.task, sizeof(data6.task), (void *)mep->task);

}

ipv6_events.perf_submit(args, &data6, sizeof(data6));

}

if (mep != 0)

whoami.delete(&sk);

return 0;

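}

"""

Where:

TRACEPOINT_PROBE(sock, inet_sock_set_state): attaches to the kernel tracepoint sock:inet_sock_set_state. The available tracepoints can be listed with the perf list command.

args->skaddr, args->sport, args->dport, and so on: the arguments of this tracepoint. They can be inspected as follows: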

[root@bogon tools]# cat /sys/kernel/debug/tracing/events/sock/inet_sock_set_state/format

name: inet_sock_set_state

ID: 1310

format:

field:unsigned short common_type; offset:0; size:2; signed:0;

field:unsigned char common_flags; offset:2; size:1; signed:0;

field:unsigned char common_preempt_count; offset:3; size:1; signed:0;

field:int common_pid; offset:4; size:4; signed:1;

field:const void * skaddr; offset:8; size:8; signed:0;

field:int oldstate; offset:16; size:4; signed:1;

field:int newstate; offset:20; size:4; signed:1;

field:__u16 sport; offset:24; size:2; signed:0;

field:__u16 dport; offset:26; size:2; signed:0;

field:__u16 family; offset:28; size:2; signed:0;

field:__u16 protocol; offset:30; size:2; signed:0;

field:__u8 saddr[4]; offset:32; size:4; signed:0;

field:__u8 daddr[4]; offset:36; size:4; signed:0;

field:__u8 saddr_v6[16]; offset:40; size:16; signed:0;

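field:__u8 daddr_v6[16]; offset:56; size:16; signed:0;

bpf_ktime_get_ns(): a BPF helper that returns a monotonic timestamp in nanoseconds. The timestamp for each sock is stored in the birth hash, keyed by sk.

bpf_get_current_pid_tgid() / bpf_get_current_comm(): return the PID and command name of the current task. Both are stored in the whoami hash, keyed by sk.

When the new state is TCP_CLOSE, the program computes the lifespan of the sock and reads its rx_b/tx_b byte counts.

As the figure above shows, tcplife takes its traffic statistics directly from tcp_sock (tp->bytes_received and tp->bytes_acked) instead of inspecting every packet, so its overhead is very low.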
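The bookkeeping described above (a timestamp and a task context cached per sk, with output only on TCP_CLOSE) can be mimicked in plain Python, with dictionaries standing in for the two BPF hash maps. This is a simplified model, not the tool's code: the FILTER_* placeholders and the byte counters are left out, and the event sequence, PIDs, and command names are hypothetical.

```python
# Numeric values of the kernel's TCP state enum that the logic relies on.
TCP_ESTABLISHED, TCP_SYN_SENT, TCP_SYN_RECV, TCP_FIN_WAIT1 = 1, 2, 3, 4
TCP_CLOSE, TCP_LAST_ACK = 7, 9

birth = {}   # sk -> timestamp in ns, stands in for BPF_HASH(birth, ...)
whoami = {}  # sk -> (pid, comm), stands in for BPF_HASH(whoami, ...)


def on_set_state(sk, newstate, now_ns, pid, comm):
    """Model of the tracepoint handler; returns a record on TCP_CLOSE."""
    if newstate < TCP_FIN_WAIT1:
        birth[sk] = now_ns               # every early state refreshes the timestamp
    if newstate in (TCP_SYN_SENT, TCP_LAST_ACK):
        whoami[sk] = (pid, comm)         # cache the task context while it is reliable
    if newstate != TCP_CLOSE:
        return None
    start = birth.pop(sk, None)
    if start is None:                    # session began before tracing started
        whoami.pop(sk, None)
        return None
    cached = whoami.pop(sk, None)
    if cached is not None:               # prefer the cached context over the current one
        pid, comm = cached
    return {"pid": pid, "comm": comm, "span_us": (now_ns - start) // 1000}


# Hypothetical client-side session: (sk, newstate, time in ns, current pid, current comm).
events = [
    ("sk1", TCP_SYN_SENT, 0, 100, "curl"),
    ("sk1", TCP_ESTABLISHED, 1_000_000, 0, "swapper/0"),
    ("sk1", TCP_FIN_WAIT1, 5_000_000, 100, "curl"),
    ("sk1", TCP_CLOSE, 6_000_000, 0, "swapper/0"),
]
records = [r for r in (on_set_state(*e) for e in events) if r is not None]
print(records)
```

In this model the final state change is attributed to a different task than the one that opened the connection, which is why the context cached at SYN_SENT is what ends up in the record.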

    def print_ipv4_event(cpu, data, size):
        event = b["ipv4_events"].event(data)
        global start_ts
        if args.time:
            if args.csv:
                print("%s," % strftime("%H:%M:%S"), end="")
            else:
                print("%-8s " % strftime("%H:%M:%S"), end="")
        if args.timestamp:
            if start_ts == 0:
                start_ts = event.ts_us
            delta_s = (float(event.ts_us) - start_ts) / 1000000
            if args.csv:
                print("%.6f," % delta_s, end="")
            else:
                print("%-9.6f " % delta_s, end="")
        print(format_string % (event.pid, event.task.decode('utf-8', 'replace'),
            "4" if args.wide or args.csv else "",
            inet_ntop(AF_INET, pack("I", event.saddr)), event.ports >> 32,
            inet_ntop(AF_INET, pack("I", event.daddr)), event.ports & 0xffffffff,
            event.tx_b / 1024, event.rx_b / 1024, float(event.span_us) / 1000))

    ...

    b = BPF(text=bpf_text)
    b["ipv4_events"].open_perf_buffer(print_ipv4_event, page_cnt=64)
    while 1:
        try:
            b.perf_buffer_poll()
        except KeyboardInterrupt:
            exit()

print_ipv4_event(cpu, data, size): defines the callback that reads events from the ipv4_events stream. cpu, data, and size are the arguments passed in by default; any function attached to the stream must accept them.

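b = BPF(text=bpf_text): loads the BPF program and returns a BPF object.

b["ipv4_events"].event(data): decodes the raw event data from ipv4_events into a Python object.

b["ipv4_events"].open_perf_buffer(print_ipv4_event, page_cnt=64): attaches the print_ipv4_event function to the ipv4_events stream.

while 1: b.perf_buffer_poll(): loops forever, blocking until events arrive and passing them to the callback.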
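Two details of the print callback are easy to miss: both ports travel in a single u64 field, and each IPv4 address arrives as a u32 whose bytes are already in network order. The sketch below reproduces both conversions with the standard library only. The helper names are made up for this example, and the sample address and ports come from the curl row in the first output.

```python
from socket import AF_INET, inet_ntop, inet_pton
from struct import pack, unpack


def pack_ports(lport, dport):
    """Same packing as the C side: dport + ((0ULL + lport) << 32)."""
    return dport + (lport << 32)


def unpack_ports(ports):
    """Same unpacking as the callback: (ports >> 32, ports & 0xffffffff)."""
    return ports >> 32, ports & 0xFFFFFFFF


# The kernel copies the 4 address bytes verbatim into a u32; simulate that here.
wire_bytes = inet_pton(AF_INET, "100.66.3.172")
saddr_u32 = unpack("I", wire_bytes)[0]       # how the u32 field reads in user space

# pack("I", ...) restores the original byte order, whatever the host endianness.
address = inet_ntop(AF_INET, pack("I", saddr_u32))
lport, dport = unpack_ports(pack_ports(61620, 80))

print(address, lport, dport)
```

Packing with the native "I" format mirrors how the value was read, so the round trip does not depend on the byte order of the machine.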